Journal of Machine Learning Research 25 (2024) 1-67 Submitted 4/23; Revised 10/23; Published 1/24

Heterogeneous-Agent Reinforcement Learning

Yifan Zhong (1,2,∗) zhongyif[email protected]
Jakub Grudzien Kuba (3,∗) jakub.gr[email protected]c.uk
Xidong Feng (4,∗)
Siyi Hu (5)
Jiaming Ji (1)
Yaodong Yang (1,†) yaodong.y[email protected]

1 Institute for Artificial Intelligence, Peking University
2 Beijing Institute for General Artificial Intelligence
3 University of Oxford
4 University College London
5 ReLER, AAII, University of Technology Sydney

∗ Equal contribution † Corresponding author

Editor: George Konidaris

Abstract

The necessity for cooperation among intelligent machines has popularised cooperative multi-agent reinforcement learning (MARL) in AI research. However, many research endeavours rely heavily on parameter sharing among agents, which confines them to the homogeneous-agent setting and leads to training instability and a lack of convergence guarantees. To achieve effective cooperation in the general heterogeneous-agent setting, we propose Heterogeneous-Agent Reinforcement Learning (HARL) algorithms that resolve the aforementioned issues. Central to our findings are the multi-agent advantage decomposition lemma and the sequential update scheme. Based on these, we develop the provably correct Heterogeneous-Agent Trust Region Learning (HATRL), and derive HATRPO and HAPPO by tractable approximations. Furthermore, we discover a novel framework named Heterogeneous-Agent Mirror Learning (HAML), which strengthens theoretical guarantees for HATRPO and HAPPO and provides a general template for cooperative MARL algorithmic designs. We prove that all algorithms derived from HAML inherently enjoy monotonic improvement of joint return and convergence to Nash Equilibrium. As its natural outcome, HAML validates more novel algorithms in addition to HATRPO and HAPPO, including HAA2C, HADDPG, and HATD3, which generally outperform their existing MA-counterparts. We comprehensively test HARL algorithms on six challenging benchmarks and demonstrate their superior effectiveness and stability for coordinating heterogeneous agents compared to strong baselines such as MAPPO and QMIX.¹

Keywords: cooperative multi-agent reinforcement learning, heterogeneous-agent trust region learning, heterogeneous-agent mirror learning, heterogeneous-agent reinforcement learning algorithms, sequential update scheme

1. Our code is available at https://github.com/PKU-MARL/HARL.

© 2024 Yifan Zhong, Jakub Grudzien Kuba, Xidong Feng, Siyi Hu, Jiaming Ji, and Yaodong Yang.

License: CC-BY 4.0, see https://creativecommons.org/licenses/by/4.0/. Attribution requirements are provided at http://jmlr.org/papers/v25/23-0488.html.

Zhong, Kuba, Feng, Hu, Ji, and Yang

1. Introduction

Cooperative Multi-Agent Reinforcement Learning (MARL) is a natural model of learning in multi-agent systems, such as robot swarms (Hüttenrauch et al., 2017, 2019), autonomous cars (Cao et al., 2012), and traffic signal control (Calvo and Dusparic, 2018). To solve cooperative MARL problems, one naive approach is to directly apply a single-agent reinforcement learning algorithm to each agent and treat the other agents as part of the environment, a paradigm commonly referred to as Independent Learning (Tan, 1993; de Witt et al., 2020). Though effective in certain tasks, independent learning fails in more complex scenarios (Hu et al., 2022b; Foerster et al., 2018), which is intuitively clear: once a learning agent updates its policy, so do its teammates, which changes the effective environment of each agent in a way that single-agent algorithms are not prepared for (Claus and Boutilier, 1998). To address this, a learning paradigm named Centralised Training with Decentralised Execution (CTDE) (Lowe et al., 2017; Foerster et al., 2018; Zhou et al., 2023) was developed. The CTDE framework learns a joint value function which, during training, has access to the global state and teammates' actions. With the help of this centralised value function, which accounts for the non-stationarity caused by others, each agent adapts its policy parameters accordingly. Thus, CTDE effectively leverages global information while still preserving decentralised agents for execution. As such, the CTDE paradigm allows a straightforward extension of single-agent policy gradient theorems (Sutton et al., 2000; Silver et al., 2014) to multi-agent scenarios (Lowe et al., 2017; Kuba et al., 2021; Mguni et al., 2021). Consequently, numerous multi-agent policy gradient algorithms have been developed (Foerster et al., 2018; Peng et al., 2017; Zhang et al., 2020; Wen et al., 2018, 2020; Yang et al., 2018; Ackermann et al., 2019).

Though existing methods have achieved reasonable performance on common benchmarks, several limitations remain. Firstly, some algorithms (Yu et al., 2022; de Witt et al., 2020) rely on parameter sharing and require agents to be homogeneous (i.e., share the same observation space and action space, and play similar roles in a cooperation task), which largely limits their applicability to heterogeneous-agent settings (i.e., no constraint on the observation spaces, action spaces, and roles of agents) and potentially harms performance (Christianos et al., 2021). While there has been work extending parameter sharing to heterogeneous agents (Terry et al., 2020), it relies on padding, which is neither elegant nor general. Secondly, existing algorithms update the agents simultaneously. As we show later in Section 2.3.1, under this update scheme the agents are unaware of their partners' update directions, which can lead to conflicting updates, resulting in training instability and failure to converge. Lastly, some algorithms, such as IPPO and MAPPO, are developed based on intuition and empirical results. The lack of theory compromises their trustworthiness in important applications.

To resolve these challenges, in this work we propose the Heterogeneous-Agent Reinforcement Learning (HARL) algorithm series, which is designed for the general heterogeneous-agent setting, achieves effective coordination through a novel sequential update scheme, and is theoretically grounded.

In particular, we capitalize on the multi-agent advantage decomposition lemma (Kuba et al., 2021) and derive a theoretically underpinned multi-agent extension of trust region learning, which is proved to enjoy the monotonic improvement property and a guarantee of convergence to a Nash Equilibrium (NE). Based on this, we propose Heterogeneous-Agent Trust Region Policy Optimisation (HATRPO) and Heterogeneous-Agent Proximal Policy Optimisation (HAPPO) as tractable approximations to the theoretical procedures.

Furthermore, inspired by Mirror Learning (Kuba et al., 2022b), which provides a theoretical explanation for the effectiveness of TRPO and PPO, we discover a novel framework named Heterogeneous-Agent Mirror Learning (HAML), which strengthens theoretical guarantees for HATRPO and HAPPO and provides a general template for cooperative MARL algorithmic designs. We prove that all algorithms derived from HAML inherently satisfy the desired properties of monotonic improvement of the joint return and convergence to Nash equilibrium. Thus, HAML dramatically expands the space of theoretically sound algorithms and, potentially, provides cooperative MARL solutions for more practical settings. We explore the HAML class and derive more theoretically underpinned and practical heterogeneous-agent algorithms, including HAA2C, HADDPG, and HATD3.

To facilitate the usage of HARL algorithms, we open-source our PyTorch-based integrated implementation. Based on this, we test HARL algorithms comprehensively on the Multi-Agent Particle Environment (MPE) (Lowe et al., 2017; Mordatch and Abbeel, 2018), Multi-Agent MuJoCo (MAMuJoCo) (Peng et al., 2021), the StarCraft Multi-Agent Challenge (SMAC) (Samvelyan et al., 2019), SMACv2 (Ellis et al., 2022), the Google Research Football Environment (GRF) (Kurach et al., 2020), and Bi-DexterousHands (Chen et al., 2022). The empirical results confirm the algorithms' effectiveness in practice. On all benchmarks with heterogeneous agents, including MPE, MAMuJoCo, GRF, and Bi-DexterousHands, HARL algorithms generally outperform their existing MA-counterparts, and the performance gaps become larger as the heterogeneity of agents increases, showing that HARL algorithms are more robust and better suited for the general heterogeneous-agent setting. While all HARL algorithms show competitive performance, HAPPO and HATD3 in particular establish new state-of-the-art results. As an off-policy algorithm, HATD3 also improves sample efficiency, leading to more efficient learning and faster convergence. On tasks where agents are mostly homogeneous, such as SMAC and SMACv2, HAPPO and HATRPO attain comparable or superior win rates at convergence while not relying on the parameter-sharing trick, demonstrating their general applicability. Through ablation analysis, we empirically show that the novelties introduced by HARL theory and algorithms are crucial for learning the optimal cooperation strategy, thus signifying their importance. Finally, we systematically analyse the computational overhead of the sequential update and conclude that it need not be a concern.

2. Preliminaries

In this section, we first introduce the problem formulation and notation for cooperative MARL, and then review existing work and analyse its limitations.

2.1 Cooperative MARL Problem Formulation and Notations

We consider a fully cooperative multi-agent task that can be described as a Markov game (MG) (Littman, 1994), also known as a stochastic game (Shapley, 1953).


Definition 1 A cooperative Markov game is defined by a tuple $\langle \mathcal{N}, \mathcal{S}, \mathcal{A}, r, P, \gamma, d \rangle$. Here, $\mathcal{N} = \{1, \dots, n\}$ is a set of $n$ agents, $\mathcal{S}$ is the state space, and $\mathcal{A} = \times_{i=1}^{n} \mathcal{A}^i$ is the product of all agents' action spaces, known as the joint action space. Further, $r : \mathcal{S} \times \mathcal{A} \to \mathbb{R}$ is the joint reward function, $P : \mathcal{S} \times \mathcal{A} \times \mathcal{S} \to [0, 1]$ is the transition probability kernel, $\gamma \in [0, 1)$ is the discount factor, and $d \in \mathcal{P}(\mathcal{S})$ (where $\mathcal{P}(X)$ denotes the set of probability distributions over a set $X$) is the positive initial state distribution.

Although our results hold for general compact state and action spaces, in this paper we assume that they are finite, for simplicity. In this work, we will also use the notation $\mathbb{P}(X)$ to denote the power set of a set $X$. At time step $t \in \mathbb{N}$, the agents are at state $s_t$; they take independent actions $a^i_t, \forall i \in \mathcal{N}$, drawn from their policies $\pi^i(\cdot^i | s_t) \in \mathcal{P}(\mathcal{A}^i)$, and equivalently, they take a joint action $\mathbf{a}_t = (a^1_t, \dots, a^n_t)$ drawn from their joint policy $\boldsymbol{\pi}(\cdot | s_t) = \prod_{i=1}^{n} \pi^i(\cdot^i | s_t) \in \mathcal{P}(\mathcal{A})$. We write $\Pi^i \triangleq \{ \times_{s \in \mathcal{S}} \pi^i(\cdot^i | s) \mid \forall s \in \mathcal{S}, \pi^i(\cdot^i | s) \in \mathcal{P}(\mathcal{A}^i) \}$ to denote the policy space of agent $i$, and $\boldsymbol{\Pi} \triangleq (\Pi^1, \dots, \Pi^n)$ to denote the joint policy space. It is important to note that when $\pi^i(\cdot^i | s)$ is a Dirac delta distribution for all $s \in \mathcal{S}$, the policy is referred to as deterministic (Silver et al., 2014) and we write $\mu^i(s)$ to refer to its centre.

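Then, the environment emits the joint reward $r_t = r(s_t, \mathbf{a}_t)$ and moves to the next state $s_{t+1} \sim P(\cdot | s_t, \mathbf{a}_t) \in \mathcal{P}(\mathcal{S})$. The joint policy $\boldsymbol{\pi}$, the transition probability kernel $P$, and the initial state distribution $d$ induce a marginal state distribution at time $t$, denoted by $\rho^t_{\boldsymbol{\pi}}$. We define an (improper) marginal state distribution $\rho_{\boldsymbol{\pi}} \triangleq \sum_{t=0}^{\infty} \gamma^t \rho^t_{\boldsymbol{\pi}}$. The state value function and the state-action value function are defined as:

$$V_{\boldsymbol{\pi}}(s) \triangleq \mathbb{E}_{\mathbf{a}_{0:\infty} \sim \boldsymbol{\pi},\, s_{1:\infty} \sim P}\Big[ \sum_{t=0}^{\infty} \gamma^t r_t \,\Big|\, s_0 = s \Big]$$

and²

$$Q_{\boldsymbol{\pi}}(s, \mathbf{a}) \triangleq \mathbb{E}_{s_{1:\infty} \sim P,\, \mathbf{a}_{1:\infty} \sim \boldsymbol{\pi}}\Big[ \sum_{t=0}^{\infty} \gamma^t r_t \,\Big|\, s_0 = s,\, \mathbf{a}_0 = \mathbf{a} \Big].$$

The advantage function is defined to be

$$A_{\boldsymbol{\pi}}(s, \mathbf{a}) \triangleq Q_{\boldsymbol{\pi}}(s, \mathbf{a}) - V_{\boldsymbol{\pi}}(s).$$

In this paper, we consider the fully-cooperative setting where the agents aim to maximise the expected joint return, defined as

$$J(\boldsymbol{\pi}) \triangleq \mathbb{E}_{s_{0:\infty} \sim \rho^{0:\infty}_{\boldsymbol{\pi}},\, \mathbf{a}_{0:\infty} \sim \boldsymbol{\pi}}\Big[ \sum_{t=0}^{\infty} \gamma^t r_t \Big].$$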
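To make $V_{\boldsymbol{\pi}}$, $Q_{\boldsymbol{\pi}}$, $A_{\boldsymbol{\pi}}$, and $J(\boldsymbol{\pi})$ concrete, the following Python sketch evaluates a product joint policy on a tiny, hypothetical two-state, two-agent game. The reward, transition kernel, discount, and initial distribution are made-up assumptions, and plain iterative policy evaluation is just one standard way to compute these quantities for finite spaces, not a procedure prescribed by the paper.

```python
import itertools

# Hypothetical toy cooperative Markov game (all numbers are illustrative assumptions):
# 2 states, 2 agents, actions {0, 1}, discount GAMMA, initial state distribution D.
STATES, ACTIONS, N_AGENTS = [0, 1], [0, 1], 2
GAMMA, D = 0.9, [0.5, 0.5]
JOINT_ACTIONS = list(itertools.product(ACTIONS, repeat=N_AGENTS))


def reward(s, a):
    """Joint reward r(s, a): 1 if the agents play the state-specific target (s, 1 - s)."""
    return 1.0 if a == (s, 1 - s) else 0.0


def transition(s, a):
    """P(.|s, a): a rewarded joint action always moves to the other state, otherwise w.p. 0.2."""
    p_move = 1.0 if reward(s, a) > 0 else 0.2
    probs = [0.0, 0.0]
    probs[1 - s], probs[s] = p_move, 1.0 - p_move
    return probs


def joint_prob(pi, s, a):
    """pi(a|s) = prod_i pi^i(a^i|s); pi[i][s] is agent i's action distribution in state s."""
    prob = 1.0
    for i, a_i in enumerate(a):
        prob *= pi[i][s][a_i]
    return prob


def backup(v, s, a):
    """r(s, a) + gamma * E_{s' ~ P(.|s, a)}[v(s')]."""
    return reward(s, a) + GAMMA * sum(p * v[s2] for s2, p in enumerate(transition(s, a)))


def evaluate(pi, tol=1e-12):
    """Iterative evaluation of V_pi, then Q_pi, A_pi and J(pi) = E_{s0 ~ d}[V_pi(s0)]."""
    v = [0.0 for _ in STATES]
    while True:
        new_v = [sum(joint_prob(pi, s, a) * backup(v, s, a) for a in JOINT_ACTIONS)
                 for s in STATES]
        done = max(abs(x - y) for x, y in zip(new_v, v)) < tol
        v = new_v
        if done:
            break
    q = {(s, a): backup(v, s, a) for s in STATES for a in JOINT_ACTIONS}
    adv = {(s, a): q[(s, a)] - v[s] for (s, a) in q}
    j = sum(D[s] * v[s] for s in STATES)
    return v, q, adv, j


uniform = [[[0.5, 0.5] for _ in STATES] for _ in range(N_AGENTS)]
v, q, adv, j = evaluate(uniform)
print(round(j, 6))  # 2.5
```

Under the uniform joint policy the rewarded joint action is drawn with probability 1/4 in either state, so by symmetry $V = 0.25 + 0.9V$ and $J = 2.5$; averaging `adv` under the policy gives (numerically) zero in every state, as the definition of the advantage implies.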

We adopt the most common solution concept for multi-agent problems, which is that of Nash equilibrium (NE) (Nash, 1951; Yang and Wang, 2020; Filar and Vrieze, 2012; Başar and Olsder, 1998), defined as follows.

Definition 2 In a fully-cooperative game, a joint policy $\boldsymbol{\pi}_* = (\pi^1_*, \dots, \pi^n_*)$ is a Nash equilibrium (NE) if for every $i \in \mathcal{N}$, $\pi^i \in \Pi^i$ implies $J(\boldsymbol{\pi}_*) \geq J\big(\pi^i, \boldsymbol{\pi}^{-i}_*\big)$.

2. We write $a^i$, $\mathbf{a}$, and $s$ when we refer to the action, joint action, and state as values, and $\mathrm{a}^i$, $\boldsymbol{\mathrm{a}}$, and $\mathrm{s}$ when we refer to them as random variables.
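A minimal Python check of Definition 2 on the single-state, two-agent reward table that appears later in Example 1. In a single-state game $J(\boldsymbol{\pi})$ is proportional to the expected reward, and it is linear in each $\pi^i$, so testing deterministic unilateral deviations suffices; `expected_reward` and `is_nash` are hypothetical helper names.

```python
import itertools

# Single-state, two-agent reward table (the one used in Example 1); policies are
# per-agent action distributions [P(a^i = 0), P(a^i = 1)].
REWARD = {(0, 0): 0.0, (0, 1): 2.0, (1, 0): 2.0, (1, 1): -1.0}


def expected_reward(policies):
    """E_{a ~ pi}[r(a)] for a product joint policy."""
    total = 0.0
    for joint in itertools.product(range(2), repeat=len(policies)):
        prob = 1.0
        for pi_i, a_i in zip(policies, joint):
            prob *= pi_i[a_i]
        total += prob * REWARD[joint]
    return total


def is_nash(policies, tol=1e-9):
    """Definition 2: no agent i can gain by a unilateral deviation to another pi^i.
    The objective is linear in pi^i, so deterministic deviations are enough to check."""
    value = expected_reward(policies)
    for i in range(len(policies)):
        for action in range(2):
            deviation = [1.0 if b == action else 0.0 for b in range(2)]
            deviated = policies[:i] + [deviation] + policies[i + 1:]
            if expected_reward(deviated) > value + tol:
                return False
    return True


print(is_nash([[1.0, 0.0], [0.0, 1.0]]))  # True: the joint action (0, 1)
print(is_nash([[0.0, 1.0], [0.0, 1.0]]))  # False: from (1, 1) either agent gains by playing 0
print(is_nash([[0.6, 0.4], [0.6, 0.4]]))  # True: a mixed equilibrium
```

The mixed equilibrium with $\pi^i(0) = 0.6$ attains an expected reward of 0.8, below the value 2 of the pure equilibria, so an NE in the sense of Definition 2 need not be a global optimum.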


NE is a well-established game-theoretic solution concept. Definition 2 characterises the equilibrium point at convergence for cooperative MARL tasks. To study the problem of finding an NE, we pay close attention to the contribution to performance from different subsets of agents. To this end, we introduce the following novel definitions.

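Definition 3 Let $i_{1:m}$ denote an ordered subset $\{i_1, \dots, i_m\}$ of $\mathcal{N}$. We write $-i_{1:m}$ to refer to its complement, and $i$ and $-i$, respectively, when $m = 1$. We write $i_k$ when we refer to the $k$th agent in the ordered subset. Correspondingly, the multi-agent state-action value function is defined as

$$Q^{i_{1:m}}_{\boldsymbol{\pi}}\big(s, \mathbf{a}^{i_{1:m}}\big) \triangleq \mathbb{E}_{\mathbf{a}^{-i_{1:m}} \sim \boldsymbol{\pi}^{-i_{1:m}}}\Big[ Q_{\boldsymbol{\pi}}\big(s, \mathbf{a}^{i_{1:m}}, \mathbf{a}^{-i_{1:m}}\big) \Big].$$

In particular, when $m = n$ (the joint action of all agents is considered), then $i_{1:n} \in \mathrm{Sym}(n)$, where $\mathrm{Sym}(n)$ denotes the set of permutations of integers $1, \dots, n$, known as the symmetric group. In that case, $Q^{i_{1:n}}_{\boldsymbol{\pi}}(s, \mathbf{a}^{i_{1:n}})$ is equivalent to $Q_{\boldsymbol{\pi}}(s, \mathbf{a})$. On the other hand, when $m = 0$, i.e., $i_{1:m} = \emptyset$, the function takes the form of $V_{\boldsymbol{\pi}}(s)$. Moreover, consider two disjoint subsets of agents, $j_{1:k}$ and $i_{1:m}$. Then, the multi-agent advantage function of $i_{1:m}$ with respect to $j_{1:k}$ is defined as

$$A^{i_{1:m}}_{\boldsymbol{\pi}}\big(s, \mathbf{a}^{j_{1:k}}, \mathbf{a}^{i_{1:m}}\big) \triangleq Q^{j_{1:k}, i_{1:m}}_{\boldsymbol{\pi}}\big(s, \mathbf{a}^{j_{1:k}}, \mathbf{a}^{i_{1:m}}\big) - Q^{j_{1:k}}_{\boldsymbol{\pi}}\big(s, \mathbf{a}^{j_{1:k}}\big). \tag{1}$$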

In words, $Q^{i_{1:m}}_{\boldsymbol{\pi}}(s, \mathbf{a}^{i_{1:m}})$ evaluates the value of agents $i_{1:m}$ taking actions $\mathbf{a}^{i_{1:m}}$ in state $s$ while marginalizing out $\mathbf{a}^{-i_{1:m}}$, and $A^{i_{1:m}}_{\boldsymbol{\pi}}(s, \mathbf{a}^{j_{1:k}}, \mathbf{a}^{i_{1:m}})$ evaluates the advantage of agents $i_{1:m}$ taking actions $\mathbf{a}^{i_{1:m}}$ in state $s$ given that the actions taken by agents $j_{1:k}$ are $\mathbf{a}^{j_{1:k}}$, with the rest of the agents' actions marginalized out by expectation. As we show later in Section 3, these functions allow us to decompose the joint advantage function, thus shedding new light on the credit assignment problem.
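The sketch below illustrates Definition 3 and Equation (1) in an assumed single-state, two-agent setting where the joint $Q_{\boldsymbol{\pi}}$ table is given; it reuses the reward table of Example 1 purely for illustration. Agents are indexed from 0 in the code, and `q_multi` and `a_multi` are hypothetical helper names.

```python
import itertools

# Assumed single-state setting: a given joint Q_pi(s, a) table for 2 agents with 2 actions,
# and each agent's policy pi^i as a list of action probabilities (agents indexed from 0).
Q = {(0, 0): 0.0, (0, 1): 2.0, (1, 0): 2.0, (1, 1): -1.0}
PI = [[0.7, 0.3], [0.7, 0.3]]
N_AGENTS, N_ACTIONS = 2, 2


def q_multi(fixed):
    """Q^{i_{1:m}}_pi(s, a^{i_{1:m}}): pin the actions in `fixed` (agent -> action) and
    marginalise the remaining agents' actions under their policies."""
    free = [i for i in range(N_AGENTS) if i not in fixed]
    total = 0.0
    for free_actions in itertools.product(range(N_ACTIONS), repeat=len(free)):
        joint = dict(fixed)
        prob = 1.0
        for i, a_i in zip(free, free_actions):
            joint[i] = a_i
            prob *= PI[i][a_i]
        total += prob * Q[tuple(joint[i] for i in range(N_AGENTS))]
    return total


def a_multi(prev, new):
    """Equation (1): A^{i_{1:m}}_pi(s, a^{j_{1:k}}, a^{i_{1:m}}) = Q^{j_{1:k}, i_{1:m}} - Q^{j_{1:k}}."""
    return q_multi({**prev, **new}) - q_multi(prev)


print(q_multi({}))                # m = 0 recovers V_pi(s), about 0.75
print(q_multi({0: 1, 1: 0}))      # m = n recovers Q_pi(s, a) = 2.0
print(a_multi({0: 1}, {1: 0}))    # agent 1 plays 0 after agent 0 played 1: about 0.9
```

Here $Q^{\{0\}}_{\boldsymbol{\pi}}(s, 1) = 0.7 \cdot 2 + 0.3 \cdot (-1) = 1.1$, so the advantage of the second agent choosing action 0 given the first agent's action 1 is $2 - 1.1 = 0.9$.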

2.2 Dealing With Partial Observability

Notably, in some cooperative multi-agent tasks, the global state $s$ may be only partially observable to the agents. That is, instead of the omniscient global state, each agent can only perceive a local observation of the environment, which does not satisfy the Markov property. The model that accounts for partial observability is the Decentralized Partially Observable Markov Decision Process (Dec-POMDP) (Oliehoek and Amato, 2016). However, solving a Dec-POMDP is proved to be NEXP-complete (Bernstein et al., 2002) and requires super-exponential time in the worst case (Zhang et al., 2021). To obtain tractable results, we assume full observability in theoretical derivations and let each agent take actions conditioned on the global state, i.e., $a^i_t \sim \pi^i(\cdot^i | s_t)$, thereby arriving at practical algorithms. In the literature (Yang et al., 2018; Kuba et al., 2021; Wang et al., 2023), this is a common modeling choice for rigor, consistency, and simplicity of the proofs.

In our implementation, we either compensate for partial observability by employing an RNN, so that agent actions are conditioned on the action-observation history, or directly use an MLP network, so that agent actions are conditioned on the partial observations. Both are common approaches adopted by existing work, including MAPPO (Yu et al., 2022), QMIX (Rashid et al., 2018), COMA (Foerster et al., 2018), OB (Kuba et al., 2021), and MACPF (Wang et al., 2023). Our experiments (Section 5) show that both approaches are capable of solving partially observable tasks.


2.3 The State of Affairs in Cooperative MARL

Before we review existing SOTA algorithms for cooperative MARL, we introduce two settings in which the algorithms can be implemented. Both can be appealing depending on the application, but their benefits also come with limitations which, if not taken care of, may deteriorate an algorithm's performance and applicability.

2.3.1 Homogeneity vs. Heterogeneity

The first setting is that of homogeneous policies, i.e., those where all agents share one set of policy parameters: $\pi^i = \pi, \forall i \in \mathcal{N}$, so that $\boldsymbol{\pi} = (\pi, \dots, \pi)$ (de Witt et al., 2020; Yu et al., 2022), commonly referred to as Full Parameter Sharing (FuPS) (Christianos et al., 2021). This approach enables a straightforward adoption of an RL algorithm in MARL, and it does not introduce much computational or sample complexity burden as the number of agents increases. As such, it has been common practice in the MARL community to improve sample efficiency and boost algorithm performance (Sunehag et al., 2018; Foerster et al., 2018; Rashid et al., 2018). However, FuPS could lead to an exponentially-suboptimal outcome in the extreme case (see Example 2 in Appendix A). While agent identity information could be added to the observation to alleviate this difficulty, FuPS+id still suffers from interference during the agents' learning process in scenarios where they have different abilities and goals, resulting in poor performance, as analysed by Christianos et al. (2021) and shown by our experiments (Figure 8). One remedy is Selective Parameter Sharing (SePS) (Christianos et al., 2021), which only shares parameters among similar agents. Nevertheless, this approach has been shown to be suboptimal and highly scenario-dependent, emphasizing the need for prior understanding of task and agent attributes to effectively utilize the SePS strategy (Hu et al., 2022a). More severely, both FuPS and SePS require the observation and action spaces of agents in a sharing group to be the same, restricting their applicability to the general heterogeneous-agent setting. Existing work that extends parameter sharing to heterogeneous agents relies on padding (Terry et al., 2020), which also cannot be generally applied. To summarize, algorithms relying on parameter sharing potentially suffer from compromised performance and applicability.
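For concreteness, the following Python sketch shows one common way (an assumption here, not the exact procedure of the cited works) to build inputs for a single shared network under FuPS+id when agents' observation sizes differ: zero-pad every observation to the largest size, in the spirit of the padding approach mentioned above, and append a one-hot agent identifier. `fups_input` is a hypothetical helper and the observations are made up.

```python
def fups_input(obs, agent_id, max_obs_dim, n_agents):
    """Input of the shared (FuPS+id) network for one agent: zero-padded obs + one-hot id."""
    if len(obs) > max_obs_dim:
        raise ValueError("observation is longer than the shared input size")
    padded = list(obs) + [0.0] * (max_obs_dim - len(obs))
    one_hot = [1.0 if j == agent_id else 0.0 for j in range(n_agents)]
    return padded + one_hot


# Hypothetical heterogeneous observations: agent 0 sees 3 features, agent 1 sees only 1.
obs_per_agent = [[0.3, -1.2, 0.5], [2.0]]
max_dim = max(len(o) for o in obs_per_agent)
inputs = [fups_input(o, i, max_dim, len(obs_per_agent)) for i, o in enumerate(obs_per_agent)]
print(inputs[1])  # [2.0, 0.0, 0.0, 0.0, 1.0]
```

The padded zeros carry no information for agent 1, and an analogous padding of the output layer would be needed when action spaces differ, which illustrates why padding is a workaround rather than a general solution.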

A more ambitious approach to MARL is to allow for heterogeneity of policies among agents, i.e., to let $\pi^i$ and $\pi^j$ be different functions when $i \neq j \in \mathcal{N}$. This setting has greater applicability, as heterogeneous agents can operate in different action spaces. Furthermore, thanks to this model's flexibility, they may learn more sophisticated joint behaviors. Lastly, they can recover homogeneous policies as a result of training, if that is indeed optimal.

Nevertheless, training heterogeneous agents is highly non-trivial. Given a joint reward, an individual agent may not be able to distill its own contribution to it, a problem known as credit assignment (Foerster et al., 2018; Kuba et al., 2021). Furthermore, even if an agent identifies its improvement direction, that direction may conflict with those of other agents when not optimised properly. We provide two examples to illustrate this phenomenon.

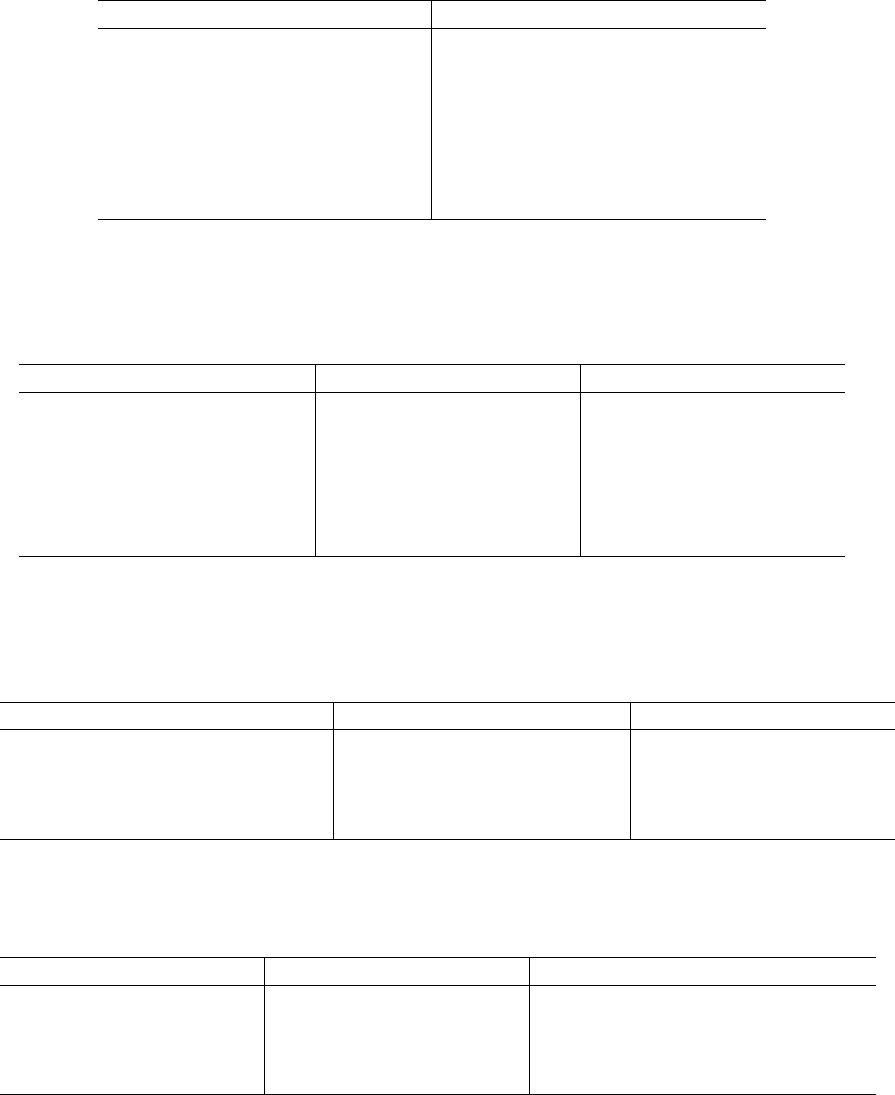

The first one is shown in Figure 1. We design a single-state differentiable game where

two agents play continuous actions a

1

, a

2

∈ R respectively, and the reward function is

r(a

1

, a

2

) = a

1

a

2

. When we initialise agent policies in the second or fourth quadrants and set

a large learning rate, the simultaneous update approach could result in a decrease in joint

6

Heterogeneous-Agent Reinforcement Learning

°2.0 °1.5 °1.0 °0.5 0.0 0.5 1.0 1.5 2.0

Agent 2

°2.0

°1.5

°1.0

°0.5

0.0

0.5

1.0

1.5

2.0

Agent 1

Two-player diÆerentiable game

°4

°3

°2

°1

0

1

2

3

4

Reward Value

Individual update direction

Joint update direction

Initial joint policy

Individual update result

Simultaneous update result

Sequential update result

Figure 1: Example of a two-agent differentiable game with r(a

1

, a

2

) = a

1

a

2

. We initialise

the two policies in the fourth quadrant. Under the straightforward simultaneous update

scheme (red), agent 1 takes a positive update to improve the joint reward, meanwhile agent

2 moves towards the negative axis for the same purpose. However, their update directions

conflict with each other and lead to a decrease in the joint return. By contrast, under our

proposed sequential update scheme (blue), agent 1 updates first, and agent 2 adapts to agent

1’ updated policy, jointly leading to improvement.

reward. In contrast, the sequential update proposed in this paper enables agent 2 to fully

adapt to agent 1’s updated policy and improves the joint reward.
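The effect in Figure 1 can be reproduced with a few lines of Python doing one step of gradient ascent on $r(a^1, a^2) = a^1 a^2$, where $\partial r / \partial a^1 = a^2$ and $\partial r / \partial a^2 = a^1$. The initial point $(a^1, a^2) = (-0.5, 0.5)$ (the fourth quadrant of Figure 1, whose horizontal axis is agent 2) and the learning rate 3.0 are illustrative choices, not values taken from the figure.

```python
def reward(a1, a2):
    return a1 * a2


def simultaneous_step(a1, a2, lr):
    # Both agents follow gradients evaluated at the old joint action.
    return a1 + lr * a2, a2 + lr * a1


def sequential_step(a1, a2, lr):
    # Agent 1 updates first; agent 2 then takes its gradient at agent 1's new action.
    a1_new = a1 + lr * a2
    a2_new = a2 + lr * a1_new
    return a1_new, a2_new


a1, a2, lr = -0.5, 0.5, 3.0
print(reward(a1, a2))                          # -0.25
print(reward(*simultaneous_step(a1, a2, lr)))  # -1.0: the joint reward decreases
print(reward(*sequential_step(a1, a2, lr)))    # 3.5: the joint reward increases
```

For this bilinear reward a simultaneous step changes the reward by $\eta\big((a^1)^2 + (a^2)^2\big) + \eta^2 a^1 a^2$, which is negative in the second or fourth quadrant whenever $\eta > \big((a^1)^2 + (a^2)^2\big) / |a^1 a^2|$; here that threshold is 2.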

As the second example, we consider a matrix game with a discrete action space, illustrated as follows:

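Example 1 Let's consider a fully-cooperative game with 2 agents, one state, and the joint action space $\{0, 1\}^2$, where the reward is given by $r(0, 0) = 0$, $r(0, 1) = r(1, 0) = 2$, and $r(1, 1) = -1$. Suppose that $\pi^i_{\text{old}}(0) > 0.6$ for $i = 1, 2$. Then, if agents $i$ update their policies by

$$\pi^i_{\text{new}} = \arg\max_{\pi^i} \mathbb{E}_{a^i \sim \pi^i,\, a^{-i} \sim \pi^{-i}_{\text{old}}}\Big[ A_{\boldsymbol{\pi}_{\text{old}}}\big(a^i, a^{-i}\big) \Big], \quad \forall i \in \mathcal{N},$$

then the resulting policy will yield a lower return,

$$J(\boldsymbol{\pi}_{\text{old}}) > J(\boldsymbol{\pi}_{\text{new}}) = \min_{\boldsymbol{\pi}} J(\boldsymbol{\pi}).$$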

This example helpfully illustrates the miscoordination problem when agents conduct independent reward maximisation simultaneously. A similar miscoordination problem when heterogeneous agents update at the same time is also shown in Example 2 of Alós-Ferrer and Netzer (2010).
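Example 1 can be verified numerically. The Python sketch below takes $\pi^i_{\text{old}}(0) = 0.7$ for both agents (one admissible choice). Because the objective is linear in $\pi^i$, each arg max is a deterministic best response to the other agent's old policy. For contrast it also applies, purely as an illustration, the sequential idea developed in Section 3: agent 2 responds to agent 1's new policy instead of its old one. Helper names are hypothetical, and in a single-state game $J$ is proportional to the expected reward computed here.

```python
import itertools

# Example 1: single state, two agents, actions {0, 1}; pi[i] = [P(a^i = 0), P(a^i = 1)].
REWARD = {(0, 0): 0.0, (0, 1): 2.0, (1, 0): 2.0, (1, 1): -1.0}


def expected_reward(pi):
    return sum(pi[0][a1] * pi[1][a2] * REWARD[(a1, a2)]
               for a1, a2 in itertools.product(range(2), repeat=2))


def q_agent(i, a_i, pi_other):
    """E_{a^{-i} ~ pi_other}[r(a^i, a^{-i})] for agent i in {0, 1}."""
    if i == 0:
        return sum(pi_other[b] * REWARD[(a_i, b)] for b in range(2))
    return sum(pi_other[b] * REWARD[(b, a_i)] for b in range(2))


def greedy_response(value_of_action):
    """The arg max of a linear objective over the simplex is a deterministic policy."""
    best = max(range(2), key=value_of_action)
    return [1.0 if a == best else 0.0 for a in range(2)]


pi_old = [[0.7, 0.3], [0.7, 0.3]]  # pi^i_old(0) = 0.7 > 0.6

# Simultaneous independent maximisation: both respond to the *old* partner policy.
pi_sim = [greedy_response(lambda a: q_agent(0, a, pi_old[1])),
          greedy_response(lambda a: q_agent(1, a, pi_old[0]))]

# Sequential illustration: agent 1 (index 1) responds to agent 0's *new* policy.
pi_seq_0 = greedy_response(lambda a: q_agent(0, a, pi_old[1]))
pi_seq = [pi_seq_0, greedy_response(lambda a: q_agent(1, a, pi_seq_0))]

print(expected_reward(pi_old))  # about 0.75
print(expected_reward(pi_sim))  # -1.0, the minimum of the reward table
print(expected_reward(pi_seq))  # 2.0, the maximum of the reward table
```

With $\pi^{-i}_{\text{old}}(0) = p$, action 1 is worth $3p - 1$ and action 0 is worth $2(1 - p)$, so both agents switch to action 1 exactly when $p > 0.6$, which is the threshold in Example 1.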

Therefore, our discussion in this section not only implies that homogeneous algorithms could have restricted performance and applicability, but also highlights that heterogeneous algorithms should be developed with extra care, since they can miscoordinate when not optimised properly (a large learning rate in Figure 1 and independent reward maximisation in Example 1), which could be common in complex high-dimensional problems. In the next subsection, we describe existing SOTA actor-critic algorithms which, while often very effective, are still not impeccable, as they suffer from one of the above two limitations.

2.3.2 Analysis of Existing Work

MAA2C (Papoudakis et al., 2021) extends A2C (Mnih et al., 2016) to MARL by replacing the RL optimisation (single-agent policy) objective with the MARL one (joint policy),

$$\mathcal{L}^{\text{MAA2C}}(\boldsymbol{\pi}) \triangleq \mathbb{E}_{s \sim \boldsymbol{\pi},\, \mathbf{a} \sim \boldsymbol{\pi}}\Big[ A_{\boldsymbol{\pi}_{\text{old}}}(s, \mathbf{a}) \Big], \tag{2}$$

which computes the gradient with respect to every agent $i$'s policy parameters, and performs a gradient-ascent update for each agent. This algorithm is straightforward to implement and is capable of solving simple multi-agent problems (Papoudakis et al., 2021). We point out, however, that by simply following their own multi-agent policy gradients (MAPG), the agents could perform uncoordinated updates, as illustrated in Figure 1. Furthermore, MAPG estimates have been proved to suffer from large variance which grows linearly with the number of agents (Kuba et al., 2021), thus making the algorithm unstable. To assure greater stability, the following MARL methods, inspired by stable RL approaches, have been developed.

MADDPG (Lowe et al., 2017) is a MARL extension of the popular DDPG algorithm (Lillicrap et al., 2016). At every iteration, every agent $i$ updates its deterministic policy by maximising the following objective

$$\mathcal{L}^{\text{MADDPG}}_i(\mu^i) \triangleq \mathbb{E}_{s \sim \beta_{\boldsymbol{\mu}_{\text{old}}}}\Big[ Q^i_{\boldsymbol{\mu}_{\text{old}}}\big(s, \mu^i(s)\big) \Big] = \mathbb{E}_{s \sim \beta_{\boldsymbol{\mu}_{\text{old}}}}\Big[ Q_{\boldsymbol{\mu}_{\text{old}}}\big(s, \mu^i(s), \boldsymbol{\mu}^{-i}_{\text{old}}(s)\big) \Big], \tag{3}$$

where $\beta_{\boldsymbol{\mu}_{\text{old}}}$ is a state distribution that is not necessarily equivalent to $\rho_{\boldsymbol{\mu}_{\text{old}}}$, thus allowing for off-policy training. In practice, MADDPG maximises Equation (3) by a few steps of gradient ascent. The main advantages of MADDPG include the small variance of its MAPG estimates, a property granted by deterministic policies (Silver et al., 2014), as well as low sample complexity due to learning from off-policy data. Such a combination makes the algorithm competitive on certain continuous-action tasks (Lowe et al., 2017). However, MADDPG does not address the multi-agent credit assignment problem (Foerster et al., 2018). Moreover, when training the decentralised actors, MADDPG does not take into account the updates agents have made and naively uses the off-policy data from the replay buffer which, much like in Section 2.3.1, leads to uncoordinated updates and suboptimal performance in the face of harder tasks (Peng et al., 2021; Ray-Team, accessed on 2023-03-14). MATD3 (Ackermann et al., 2019) proposes to reduce overestimation bias in MADDPG using double centralized critics, which improves its performance and stability but does not help with getting rid of the aforementioned limitations.

MAPPO (Yu et al., 2022) is a relatively straightforward extension of PPO (Schulman et al., 2017) to MARL. In its default formulation, the agents employ the trick of parameter sharing described in the previous subsection. As such, the policy is updated to maximise

$$\mathcal{L}^{\text{MAPPO}}(\pi) \triangleq \mathbb{E}_{s \sim \rho_{\boldsymbol{\pi}_{\text{old}}},\, \mathbf{a} \sim \boldsymbol{\pi}_{\text{old}}}\left[ \sum_{i=1}^{n} \min\left( \frac{\pi(a^i | s)}{\pi_{\text{old}}(a^i | s)} A_{\boldsymbol{\pi}_{\text{old}}}(s, \mathbf{a}),\ \text{clip}\left( \frac{\pi(a^i | s)}{\pi_{\text{old}}(a^i | s)}, 1 \pm \epsilon \right) A_{\boldsymbol{\pi}_{\text{old}}}(s, \mathbf{a}) \right) \right], \tag{4}$$

where the $\text{clip}(\cdot, 1 \pm \epsilon)$ operator clips the input to $1 - \epsilon$ / $1 + \epsilon$ if it is below/above this value. Such an operation removes the incentive for agents to make large policy updates, thus effectively stabilising training. Indeed, the algorithm's performance on the StarCraftII benchmark is remarkable, and it is accomplished using only on-policy data. Nevertheless, the parameter-sharing strategy limits the algorithm's applicability and could lead to suboptimality when agents have different roles. To avoid this issue, one can implement the algorithm without parameter sharing, so that the agents simply take simultaneous PPO updates while employing a joint advantage estimator. In this case, the updates could be uncoordinated, as we discussed in Section 2.3.1.
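The per-sample term inside Equation (4) can be sketched in Python as follows, assuming that the probabilities of the sampled actions under the shared policy $\pi$ and under $\pi_{\text{old}}$, together with a joint advantage estimate, are already available. `mappo_surrogate` is a hypothetical name, `eps` plays the role of $\epsilon$, and the numbers are illustrative.

```python
def clip(x, low, high):
    return max(low, min(x, high))


def mappo_surrogate(new_probs, old_probs, advantage, eps=0.2):
    """Per-sample term of Equation (4): sum over agents i of
    min(ratio_i * A, clip(ratio_i, 1 - eps, 1 + eps) * A),
    with ratio_i = pi(a^i|s) / pi_old(a^i|s) and A the joint advantage estimate."""
    total = 0.0
    for p_new, p_old in zip(new_probs, old_probs):
        ratio = p_new / p_old
        total += min(ratio * advantage, clip(ratio, 1.0 - eps, 1.0 + eps) * advantage)
    return total


# Two agents whose sampled actions became more (ratio 1.5) and less (ratio 0.5) likely.
print(mappo_surrogate([0.6, 0.2], [0.4, 0.4], advantage=1.0))   # about 1.7 = 1.2 + 0.5
print(mappo_surrogate([0.6, 0.2], [0.4, 0.4], advantage=-1.0))  # about -2.3 = -1.5 - 0.8
```

With $A = 1$ the first agent's ratio of 1.5 is cut to 1.2, so pushing that probability further up earns nothing; with $A = -1$ the min keeps the unclipped $-1.5$, so the large ratio is still penalised. This pessimistic choice is what removes the incentive for large updates.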

In summary, none of these algorithms possesses performance guarantees. Altering their implementation settings to avoid one of the limitations from Section 2.3.1 makes them, at best, fall into the other. This shows that the MARL problem introduces additional complexity into the single-agent RL setting and needs additional care to be rigorously solved. With this motivation, in the next section, we propose novel heterogeneous-agent methods based on sequential update with correctness guarantees.

3. Our Methods

The purpose of this section is to introduce the Heterogeneous-Agent Reinforcement Learning (HARL) algorithm series, which we prove to solve cooperative problems theoretically. HARL algorithms are designed for the general and expressive setting of heterogeneous agents, and their essence is to coordinate agents' updates, thus resolving the challenges in Section 2.3.1. We start by developing a theoretically justified Heterogeneous-Agent Trust Region Learning (HATRL) procedure in Section 3.1 and deriving practical algorithms, namely HATRPO and HAPPO, as its tractable approximations in Section 3.2. We further introduce the novel Heterogeneous-Agent Mirror Learning (HAML) framework in Section 3.3, which strengthens the performance guarantees of HATRPO and HAPPO (Section 3.4) and provides a general template for cooperative MARL algorithmic design, leading to more HARL algorithms (Section 3.5).

3.1 Heterogeneous-Agent Trust Region Learning (HATRL)

Intuitively, if we parameterise all agents separately and let them learn one by one, then we will break the homogeneity constraint and allow the agents to coordinate their updates, thereby avoiding the two limitations from Section 2.3. Such coordination can be achieved, for example, by accounting for previous agents' updates in the optimization objective of the current one along the aforementioned sequence. Fortunately, this idea is embodied in the multi-agent advantage function $A^{i_m}_{\boldsymbol{\pi}}(s, \mathbf{a}^{i_{1:m-1}}, a^{i_m})$, which allows agent $i_m$ to evaluate the utility of its action $a^{i_m}$ given the actions of previous agents $\mathbf{a}^{i_{1:m-1}}$. Intriguingly, multi-agent advantage functions allow for a rigorous decomposition of the joint advantage function, as described by the following pivotal lemma.


Figure 2: The multi-agent advantage decomposition lemma and the sequential update scheme are naturally consistent. The former (upper part of the figure) decomposes the joint advantage into sequential advantage evaluations, each of which takes into consideration previous agents' actions. Based on this, the latter (lower part of the figure) allows each policy to be updated considering previous updates during the training stage. The rigor of their connection is embodied in Lemma 6 and Lemma 13, where the multi-agent advantage decomposition lemma is crucial for the proofs and leads to algorithms that employ the sequential update scheme.

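Lemma 4 (Multi-Agent Advantage Decomposition) In any cooperative Markov game, given a joint policy $\boldsymbol{\pi}$, for any state $s$ and any agent subset $i_{1:m}$, the following equation holds:

$$A^{i_{1:m}}_{\boldsymbol{\pi}}\big(s, \mathbf{a}^{i_{1:m}}\big) = \sum_{j=1}^{m} A^{i_j}_{\boldsymbol{\pi}}\big(s, \mathbf{a}^{i_{1:j-1}}, a^{i_j}\big).$$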

For proof see Appendix B. Notably, Lemma 4 holds in general for cooperative Markov games, with no need for any assumptions on the decomposability of the joint value function such as those in VDN (Sunehag et al., 2018), QMIX (Rashid et al., 2018) or Q-DPP (Yang et al., 2020).
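In a single state the identity reduces to a telescoping sum of Equation (1), since $A^{i_{1:m}}_{\boldsymbol{\pi}} = Q^{i_{1:m}}_{\boldsymbol{\pi}} - V_{\boldsymbol{\pi}}$ and each summand is $Q^{i_{1:j}}_{\boldsymbol{\pi}} - Q^{i_{1:j-1}}_{\boldsymbol{\pi}}$. The Python check below confirms this numerically on a random three-agent game; the random Q-table and policies are assumptions for illustration, and this is a sanity check rather than the proof in Appendix B.

```python
import itertools
import random

random.seed(0)
N_AGENTS, N_ACTIONS = 3, 2

# Hypothetical single-state data: a random joint Q-table and random per-agent policies.
Q = {a: random.uniform(-1.0, 1.0) for a in itertools.product(range(N_ACTIONS), repeat=N_AGENTS)}
PI = []
for _ in range(N_AGENTS):
    w = [random.random() + 0.1 for _ in range(N_ACTIONS)]
    PI.append([x / sum(w) for x in w])


def q_multi(fixed):
    """Q^{i_{1:m}}_pi: pin the agents in `fixed` (agent -> action), average the others under PI."""
    free = [i for i in range(N_AGENTS) if i not in fixed]
    total = 0.0
    for acts in itertools.product(range(N_ACTIONS), repeat=len(free)):
        joint = {**fixed, **dict(zip(free, acts))}
        prob = 1.0
        for i, a_i in zip(free, acts):
            prob *= PI[i][a_i]
        total += prob * Q[tuple(joint[i] for i in range(N_AGENTS))]
    return total


def check_decomposition(order, actions):
    """Return (A^{i_{1:m}}(s, a^{i_{1:m}}), sum_j A^{i_j}(s, a^{i_{1:j-1}}, a^{i_j}))."""
    lhs = q_multi({i: actions[i] for i in order}) - q_multi({})
    rhs = 0.0
    for j in range(len(order)):
        prev = {i: actions[i] for i in order[:j]}
        rhs += q_multi({**prev, order[j]: actions[order[j]]}) - q_multi(prev)
    return lhs, rhs


lhs, rhs = check_decomposition([2, 0, 1], (1, 0, 1))
print(abs(lhs - rhs) < 1e-12)  # True
```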

Lemma 4 confirms that a sequential update is an effective approach to search for the direction of performance improvement (i.e., joint actions with positive advantage values) in multi-agent learning. That is, imagine that agents take actions sequentially by following an arbitrary order $i_{1:n}$. Let agent $i_1$ take action $\bar{a}^{i_1}$ such that $A^{i_1}_{\boldsymbol{\pi}}(s, \bar{a}^{i_1}) > 0$, and then, for the remaining $m = 2, \dots, n$, let each agent $i_m$ take an action $\bar{a}^{i_m}$ such that $A^{i_m}_{\boldsymbol{\pi}}(s, \bar{\mathbf{a}}^{i_{1:m-1}}, \bar{a}^{i_m}) > 0$. For the induced joint action $\bar{\mathbf{a}}$, Lemma 4 assures that $A_{\boldsymbol{\pi}}(s, \bar{\mathbf{a}})$ is positive, thus the performance is guaranteed to improve. To formally extend the above process into a policy iteration procedure with a monotonic improvement guarantee, we begin by introducing the following definitions.

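Definition 5 Let $\boldsymbol{\pi}$ be a joint policy, $\bar{\boldsymbol{\pi}}^{i_{1:m-1}} = \prod_{j=1}^{m-1} \bar{\pi}^{i_j}$ be some other joint policy of agents $i_{1:m-1}$, and $\hat{\pi}^{i_m}$ be some other policy of agent $i_m$. Then

$$L^{i_{1:m}}_{\boldsymbol{\pi}}\big(\bar{\boldsymbol{\pi}}^{i_{1:m-1}}, \hat{\pi}^{i_m}\big) \triangleq \mathbb{E}_{s \sim \rho_{\boldsymbol{\pi}},\, \mathbf{a}^{i_{1:m-1}} \sim \bar{\boldsymbol{\pi}}^{i_{1:m-1}},\, a^{i_m} \sim \hat{\pi}^{i_m}}\Big[ A^{i_m}_{\boldsymbol{\pi}}\big(s, \mathbf{a}^{i_{1:m-1}}, a^{i_m}\big) \Big].$$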


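Note that, for any $\bar{\boldsymbol{\pi}}^{i_{1:m-1}}$, we have

$$
\begin{aligned}
L^{i_{1:m}}_{\boldsymbol{\pi}}\big(\bar{\boldsymbol{\pi}}^{i_{1:m-1}}, \pi^{i_m}\big)
&= \mathbb{E}_{s \sim \rho_{\boldsymbol{\pi}},\, \mathbf{a}^{i_{1:m-1}} \sim \bar{\boldsymbol{\pi}}^{i_{1:m-1}},\, a^{i_m} \sim \pi^{i_m}}\Big[ A^{i_m}_{\boldsymbol{\pi}}\big(s, \mathbf{a}^{i_{1:m-1}}, a^{i_m}\big) \Big] \\
&= \mathbb{E}_{s \sim \rho_{\boldsymbol{\pi}},\, \mathbf{a}^{i_{1:m-1}} \sim \bar{\boldsymbol{\pi}}^{i_{1:m-1}}}\Big[ \mathbb{E}_{a^{i_m} \sim \pi^{i_m}}\Big[ A^{i_m}_{\boldsymbol{\pi}}\big(s, \mathbf{a}^{i_{1:m-1}}, a^{i_m}\big) \Big] \Big] = 0.
\end{aligned}
\tag{5}
$$

The last equality holds because, by Equation (1), the inner expectation equals $\mathbb{E}_{a^{i_m} \sim \pi^{i_m}}\big[ Q^{i_{1:m}}_{\boldsymbol{\pi}}(s, \mathbf{a}^{i_{1:m-1}}, a^{i_m}) \big] - Q^{i_{1:m-1}}_{\boldsymbol{\pi}}(s, \mathbf{a}^{i_{1:m-1}})$, and marginalizing $a^{i_m}$ under $\pi^{i_m}$ turns $Q^{i_{1:m}}_{\boldsymbol{\pi}}$ into $Q^{i_{1:m-1}}_{\boldsymbol{\pi}}$ (Definition 3).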
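Equation (5) can also be checked numerically. The Python sketch below assumes a single state and drops the constant factor that $\rho_{\boldsymbol{\pi}}$ contributes there; the random Q-table and policies, the update order, and the helper names are hypothetical.

```python
import itertools
import random

random.seed(1)
N_AGENTS, N_ACTIONS = 3, 3


def random_policy():
    weights = [random.random() + 0.1 for _ in range(N_ACTIONS)]
    return [w / sum(weights) for w in weights]


# Hypothetical single-state data: a random joint Q-table and a random joint policy pi.
Q = {a: random.gauss(0.0, 1.0) for a in itertools.product(range(N_ACTIONS), repeat=N_AGENTS)}
PI = [random_policy() for _ in range(N_AGENTS)]


def q_multi(fixed):
    """Q^{i_{1:m}}_pi(s, a^{i_{1:m}}): pin the agents in `fixed`, average the others under PI."""
    free = [i for i in range(N_AGENTS) if i not in fixed]
    total = 0.0
    for acts in itertools.product(range(N_ACTIONS), repeat=len(free)):
        joint = {**fixed, **dict(zip(free, acts))}
        prob = 1.0
        for i, a_i in zip(free, acts):
            prob *= PI[i][a_i]
        total += prob * Q[tuple(joint[i] for i in range(N_AGENTS))]
    return total


def surrogate(order, pi_bar, pi_hat):
    """Definition 5 in one state (constant rho_pi factor dropped):
    E_{a^{i_{1:m-1}} ~ pi_bar, a^{i_m} ~ pi_hat}[A^{i_m}_pi(s, a^{i_{1:m-1}}, a^{i_m})]."""
    prev, last = order[:-1], order[-1]
    total = 0.0
    for prev_acts in itertools.product(range(N_ACTIONS), repeat=len(prev)):
        weight = 1.0
        for i, a_i in zip(prev, prev_acts):
            weight *= pi_bar[i][a_i]
        fixed = dict(zip(prev, prev_acts))
        base = q_multi(fixed)
        for a in range(N_ACTIONS):
            total += weight * pi_hat[a] * (q_multi({**fixed, last: a}) - base)
    return total


order = [1, 2, 0]                                    # i_{1:3}; agent 0 updates last
pi_bar = {1: random_policy(), 2: random_policy()}    # arbitrary new policies of i_1, i_2
print(abs(surrogate(order, pi_bar, PI[0])) < 1e-12)  # True, as in Equation (5)
print(surrogate(order, pi_bar, [1.0, 0.0, 0.0]))     # generally non-zero
```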

Building on Lemma 4 and Definition 5, we derive the following bound for a joint policy update.

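Lemma 6 Let $\boldsymbol{\pi}$ be a joint policy. Then, for any joint policy $\bar{\boldsymbol{\pi}}$, we have

$$J(\bar{\boldsymbol{\pi}}) \geq J(\boldsymbol{\pi}) + \sum_{m=1}^{n} \Big[ L^{i_{1:m}}_{\boldsymbol{\pi}}\big(\bar{\boldsymbol{\pi}}^{i_{1:m-1}}, \bar{\pi}^{i_m}\big) - C D^{\max}_{\text{KL}}\big(\pi^{i_m}, \bar{\pi}^{i_m}\big) \Big], \quad \text{where } C = \frac{4\gamma \max_{s, \mathbf{a}} |A_{\boldsymbol{\pi}}(s, \mathbf{a})|}{(1 - \gamma)^2}. \tag{6}$$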

For proof see Appendix B.2. This lemma suggests how a joint policy can be improved. Suppose agents set the values of the above summands $L^{i_{1:m}}_{\pi}(\bar{\pi}^{i_{1:m-1}}, \bar{\pi}^{i_m}) - C D^{\max}_{\mathrm{KL}}(\pi^{i_m}, \bar{\pi}^{i_m})$ by sequentially updating their policies. By Equation (5), each of them can always make its summand zero by making no policy update (i.e., $\bar{\pi}^{i_m} = \pi^{i_m}$, for which the KL term also vanishes). Hence each agent can always secure a non-negative summand, and any update that makes its summand positive raises the lower bound on $J(\bar{\pi})$ above $J(\pi)$. Moreover, as $n$ agents make policy updates, these increments accumulate across agents, which can lead to a substantial improvement. Lastly, this property holds regardless of the order in which agents make their updates, which allows flexible scheduling of the update order at each iteration. To summarise, we propose the following Algorithm 1.

Algorithm 1: Multi-Agent Policy Iteration with Monotonic Improvement Guarantee

Initialise the joint policy $\pi_0 = (\pi^1_0, \dots, \pi^n_0)$.

for $k = 0, 1, \dots$ do

    Compute the advantage function $A_{\pi_k}(s, \mathbf{a})$ for all state-(joint)action pairs $(s, \mathbf{a})$.

    Compute $\epsilon = \max_{s, \mathbf{a}}\left|A_{\pi_k}(s, \mathbf{a})\right|$ and $C = \frac{4\gamma\epsilon}{(1-\gamma)^2}$.

    Draw a permutation $i_{1:n}$ of agents at random.

    for $m = 1 : n$ do

        Make an update $\pi^{i_m}_{k+1} = \arg\max_{\pi^{i_m}}\left[L^{i_{1:m}}_{\pi_k}\left(\pi^{i_{1:m-1}}_{k+1}, \pi^{i_m}\right) - C D^{\max}_{\mathrm{KL}}\left(\pi^{i_m}_k, \pi^{i_m}\right)\right]$.

We want to highlight that the algorithm is markedly different from naively applying the

TRPO update on the joint policy of all agents. Firstly, our Algorithm 1 does not update the

entire joint policy at once, but rather updates each agent’s individual policy sequentially.

Secondly, during the sequential update, each agent has a unique optimisation objective that

takes into account all previous agents’ updates, which is also the key for the monotonic

improvement property to hold. The following theorem shows that Algorithm 1 enjoys the monotonic improvement property.

Theorem 7 A sequence $(\pi_k)_{k=0}^{\infty}$ of joint policies updated by Algorithm 1 has the monotonic improvement property, i.e., $J(\pi_{k+1}) \geq J(\pi_k)$ for all $k \in \mathbb{N}$.


For proof see Appendix B.2. With the above theorem, we obtain Heterogeneous-Agent Trust Region Learning (HATRL), which retains the monotonic improvement property of trust region learning. Moreover, we go a step further and prove that Algorithm 1 converges asymptotically to NE.

Theorem 8 Suppose that, in Algorithm 1, any permutation of agents has a fixed non-zero probability to begin the update. Then a sequence $(\pi_k)_{k=0}^{\infty}$ of joint policies generated by the algorithm in a cooperative Markov game has a non-empty set of limit points, each of which is a Nash equilibrium.

For proof see Appendix B.3. In deriving this result, the novel details introduced by Algorithm 1 played an important role. The monotonic improvement property (Theorem 7), achieved through the multi-agent advantage decomposition lemma and the sequential update scheme, guarantees that the sequence of returns converges, since it is non-decreasing and bounded. Furthermore, randomisation of the update order ensures that, at convergence, none of the agents is incentivised to make an update. The proof is completed by excluding the possibility that the algorithm converges at non-equilibrium points.
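Algorithm 1 and the behaviour described by Theorems 7 and 8 can be illustrated in a deliberately degenerate setting. This setting is our own simplifying assumption: a single-state, two-agent cooperative matrix game with $\gamma = 0$. There the coefficient $C$ of Equation (6) is zero and $J$ is the expected immediate reward. Each agent's arg max therefore reduces to a greedy best response. The response is computed against the new policies of agents that already moved and the old policies of agents that have not. The payoff matrix is hypothetical, and this is a sketch rather than the authors' implementation.

```python
import numpy as np

# Hypothetical shared reward R[a^1, a^2] of a single-state, two-agent game (gamma = 0).
R = np.array([[1.0, 0.0, 0.0],
              [0.0, 2.0, 0.0],
              [0.0, 0.0, 3.0]])

def joint_return(pols):
    """J(pi) = E_{a ~ pi}[R(a)] when gamma = 0 and there is a single state."""
    return pols[0] @ R @ pols[1]

def algorithm1_iteration(pols, rng):
    """One outer iteration of Algorithm 1 in the gamma = 0 case (so C = 0)."""
    new = list(pols)                       # entries are replaced as agents update
    for agent in rng.permutation(2):       # random update order i_{1:n}
        other = 1 - agent
        payoff = R if agent == 0 else R.T  # rows index this agent's own action
        # new[other] is the updated policy if the other agent already moved, else its old
        # policy, matching the policies that agent i_m's surrogate L conditions on.
        scores = payoff @ new[other]
        greedy = np.zeros_like(scores)
        greedy[np.argmax(scores)] = 1.0    # arg max of the (linear) surrogate is greedy here
        new[agent] = greedy
    return new

rng = np.random.default_rng(0)
pols = [np.full(3, 1 / 3), np.full(3, 1 / 3)]   # uniform initial policies
returns = [joint_return(pols)]
for k in range(3):
    pols = algorithm1_iteration(pols, rng)
    returns.append(joint_return(pols))
print([round(float(r), 3) for r in returns])    # [0.667, 3.0, 3.0, 3.0]
```

In this toy run the returns never decrease, and the policies stop changing once neither agent can improve by deviating alone, which mirrors Theorems 7 and 8 in this special case.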

3.2 Practical Algorithms

When implementing Algorithm 1 in practice, large state and action spaces could prevent agents from designating policies $\pi^i(\cdot|s)$ for each state $s$ separately. To handle this, we parameterise each agent's policy $\pi^i_{\theta^i}$ by $\theta^i$, which, together with other agents' policies, forms a joint policy $\pi_{\theta}$ parametrised by $\theta = (\theta^1, \dots, \theta^n)$. In this subsection, we develop two deep MARL algorithms to optimise $\theta$.

3.2.1 HATRPO

Computing $D^{\max}_{\mathrm{KL}}\left(\pi^{i_m}_{\theta^{i_m}_k}, \pi^{i_m}_{\theta^{i_m}}\right)$ in Algorithm 1 is challenging, because it requires evaluating the KL-divergence for all states at each iteration. Similar to TRPO, one can ease this maximal KL-divergence penalty by replacing it with the expected KL-divergence constraint $\mathbb{E}_{s \sim \rho_{\pi_{\theta_k}}}\left[D_{\mathrm{KL}}\left(\pi^{i_m}_{\theta^{i_m}_k}(\cdot|s), \pi^{i_m}_{\theta^{i_m}}(\cdot|s)\right)\right] \leq \delta$. Here $\delta$ is a threshold hyperparameter, and the expectation can be easily approximated by stochastic sampling. With this amendment, we propose the practical HATRPO algorithm. At every iteration $k+1$, given a permutation of agents $i_{1:n}$, agent $i_{m \in \{1, \dots, n\}}$ sequentially optimises its policy parameter $\theta^{i_m}_{k+1}$ by maximising a constrained objective:

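$$\begin{aligned}
\theta^{i_m}_{k+1} = \arg\max_{\theta^{i_m}}\ & \mathbb{E}_{s \sim \rho_{\pi_{\theta_k}},\, \mathbf{a}^{i_{1:m-1}} \sim \pi^{i_{1:m-1}}_{\theta^{i_{1:m-1}}_{k+1}},\, a^{i_m} \sim \pi^{i_m}_{\theta^{i_m}}}\left[A^{i_m}_{\pi_{\theta_k}}\left(s, \mathbf{a}^{i_{1:m-1}}, a^{i_m}\right)\right], \\
\text{subject to}\ & \mathbb{E}_{s \sim \rho_{\pi_{\theta_k}}}\left[D_{\mathrm{KL}}\left(\pi^{i_m}_{\theta^{i_m}_k}(\cdot|s), \pi^{i_m}_{\theta^{i_m}}(\cdot|s)\right)\right] \leq \delta.
\end{aligned} \tag{7}$$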

To solve the above problem, similar to TRPO, one can apply a linear approximation to the objective function and a quadratic approximation to the KL constraint. The optimisation problem in Equation (7) can then be solved by a closed-form update rule as

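$$\theta^{i_m}_{k+1} = \theta^{i_m}_k + \alpha^j \sqrt{\frac{2\delta}{\left(\boldsymbol{g}^{i_m}_k\right)^{\top}\left(\boldsymbol{H}^{i_m}_k\right)^{-1}\boldsymbol{g}^{i_m}_k}}\left(\boldsymbol{H}^{i_m}_k\right)^{-1}\boldsymbol{g}^{i_m}_k, \tag{8}$$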

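where $\boldsymbol{H}^{i_m}_k = \nabla^2_{\theta^{i_m}}\mathbb{E}_{s \sim \rho_{\pi_{\theta_k}}}\left[D_{\mathrm{KL}}\left(\pi^{i_m}_{\theta^{i_m}_k}(\cdot|s), \pi^{i_m}_{\theta^{i_m}}(\cdot|s)\right)\right]\Big|_{\theta^{i_m} = \theta^{i_m}_k}$ is the Hessian of the expected KL-divergence, and $\boldsymbol{g}^{i_m}_k$ is the gradient of the objective in Equation (7). The coefficient $\alpha^j < 1$ is positive and is found via backtracking line search. The product $\left(\boldsymbol{H}^{i_m}_k\right)^{-1}\boldsymbol{g}^{i_m}_k$ can be efficiently computed with the conjugate gradient algorithm.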
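Below is a minimal NumPy sketch of the update in Equation (8), resting on several illustrative assumptions:

- A small explicit positive-definite matrix stands in for $\boldsymbol{H}^{i_m}_k$. In a real policy network only Hessian-vector products would be available, which is why the conjugate gradient routine touches $\boldsymbol{H}$ solely through `hvp`.
- The acceptance test `accept` is a hypothetical user-supplied predicate, for example "the surrogate improved and the sampled KL stays within $\delta$".
- The backtracking coefficient `alpha = 0.5` is an assumed value. Trials start at $j = 1$, so every coefficient $\alpha^j$ is below 1.

```python
import numpy as np

def conjugate_gradient(hvp, g, iters=50, tol=1e-10):
    """Approximately solve H x = g, touching H only through hvp(v) = H v."""
    x = np.zeros_like(g)
    r = g.copy()                      # residual g - H x, with x = 0 initially
    p = r.copy()                      # search direction
    rs = r @ r
    for _ in range(iters):
        if rs < tol:
            break
        Hp = hvp(p)
        step = rs / (p @ Hp)
        x += step * p
        r -= step * Hp
        rs_new = r @ r
        p = r + (rs_new / rs) * p
        rs = rs_new
    return x

def hatrpo_step(theta, g, hvp, delta, accept, alpha=0.5, max_backtracks=10):
    """Equation (8): theta + alpha^j * sqrt(2 delta / g^T H^{-1} g) * H^{-1} g."""
    x = conjugate_gradient(hvp, g)                  # x ~= H^{-1} g
    full_step = np.sqrt(2.0 * delta / (g @ x)) * x  # quadratic KL model equals delta here
    for j in range(1, max_backtracks + 1):          # alpha^j < 1 for every trial
        candidate = theta + alpha**j * full_step
        if accept(candidate):
            return candidate
    return theta                                     # no acceptable step: keep old parameters

# Usage with a small explicit matrix standing in for the KL Hessian.
H = np.array([[4.0, 1.0], [1.0, 3.0]])
g = np.array([1.0, 2.0])
theta_new = hatrpo_step(np.zeros(2), g, lambda v: H @ v, delta=0.01, accept=lambda c: True)
```

Because the accepted step here is $\alpha^1 = 0.5$ times the full step, its quadratic KL model $\frac{1}{2}\Delta\theta^{\top}\boldsymbol{H}\Delta\theta$ equals $0.25\,\delta$.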

Estimating $\mathbb{E}_{\mathbf{a}^{i_{1:m-1}} \sim \pi^{i_{1:m-1}}_{\theta_{k+1}},\, a^{i_m} \sim \pi^{i_m}_{\theta^{i_m}}}\left[A^{i_m}_{\pi_{\theta_k}}\left(s, \mathbf{a}^{i_{1:m-1}}, a^{i_m}\right)\right]$ is the last missing piece for HATRPO. It poses new challenges, because each agent's objective has to take into account all previous agents' updates, and the size of the advantage function's input varies with $m$. Fortunately, the following proposition lets us estimate this objective efficiently with a joint advantage estimator.

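Proposition 9 Let $\pi = \prod_{j=1}^{n} \pi^{i_j}$ be a joint policy, and $A_{\pi}(s, \mathbf{a})$ be its joint advantage function. Let $\bar{\pi}^{i_{1:m-1}} = \prod_{j=1}^{m-1} \bar{\pi}^{i_j}$ be some other joint policy of agents $i_{1:m-1}$, and $\hat{\pi}^{i_m}$ be some other policy of agent $i_m$. Then, for every state $s$,

$$\mathbb{E}_{\mathbf{a}^{i_{1:m-1}} \sim \bar{\pi}^{i_{1:m-1}},\, a^{i_m} \sim \hat{\pi}^{i_m}}\left[A^{i_m}_{\pi}\left(s, \mathbf{a}^{i_{1:m-1}}, a^{i_m}\right)\right] = \mathbb{E}_{\mathbf{a} \sim \pi}\left[\left(\frac{\hat{\pi}^{i_m}\left(a^{i_m}|s\right)}{\pi^{i_m}\left(a^{i_m}|s\right)} - 1\right)\frac{\bar{\pi}^{i_{1:m-1}}\left(\mathbf{a}^{i_{1:m-1}}|s\right)}{\pi^{i_{1:m-1}}\left(\mathbf{a}^{i_{1:m-1}}|s\right)}A_{\pi}(s, \mathbf{a})\right]. \tag{9}$$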

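For proof see Appendix C.1. One benefit of applying Equation (9) is that agents only need to maintain a joint advantage estimator $A_{\pi}(s, \mathbf{a})$ rather than one centralised critic for each individual agent (e.g., unlike CTDE methods such as MADDPG). A second practical benefit concerns the objective $\mathbb{E}_{\mathbf{a}^{i_{1:m-1}} \sim \pi^{i_{1:m-1}}_{\theta^{i_{1:m-1}}_{k+1}},\, a^{i_m} \sim \pi^{i_m}_{\theta^{i_m}}}\left[A^{i_m}_{\pi_{\theta_k}}\left(s, \mathbf{a}^{i_{1:m-1}}, a^{i_m}\right)\right]$. Given an estimator $\hat{A}(s, \mathbf{a})$ of the advantage function $A_{\pi_{\theta_k}}(s, \mathbf{a})$, for example GAE (Schulman et al., 2016), this objective can be estimated with an estimator of

$$\left(\frac{\pi^{i_m}_{\theta^{i_m}}\left(a^{i_m}|s\right)}{\pi^{i_m}_{\theta^{i_m}_k}\left(a^{i_m}|s\right)} - 1\right) M^{i_{1:m}}(s, \mathbf{a}), \quad \text{where } M^{i_{1:m}}(s, \mathbf{a}) = \frac{\pi^{i_{1:m-1}}_{\theta^{i_{1:m-1}}_{k+1}}\left(\mathbf{a}^{i_{1:m-1}}|s\right)}{\pi^{i_{1:m-1}}_{\theta^{i_{1:m-1}}_k}\left(\mathbf{a}^{i_{1:m-1}}|s\right)}\hat{A}(s, \mathbf{a}). \tag{10}$$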
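Proposition 9 can be checked numerically. The sketch below makes our own assumptions: one state, three agents in the fixed order $i_{1:3} = (1, 2, 3)$, and random payoffs and policies. It evaluates both sides of Equation (9) for $m = 3$. The left side averages the third agent's multi-agent advantage under the other policies. The right side reweights the joint advantage under the current joint policy with the ratios appearing in Equation (9).

```python
import numpy as np

rng = np.random.default_rng(0)
sizes = (2, 3, 4)                                        # action-space sizes of agents 1, 2, 3
Q = rng.normal(size=sizes)                               # joint Q_pi(s, a) at a single state s
pi = [rng.dirichlet(np.ones(k)) for k in sizes]          # current policies pi^1, pi^2, pi^3
pi_bar = [rng.dirichlet(np.ones(k)) for k in sizes[:2]]  # other policies of agents 1 and 2
pi_hat3 = rng.dirichlet(np.ones(sizes[2]))               # other policy of agent 3

# Joint value V_pi(s) and joint advantage A_pi(s, a).
V = np.einsum("i,j,k,ijk->", pi[0], pi[1], pi[2], Q)
A = Q - V

# Left side: E_{a^{1:2} ~ pi_bar, a^3 ~ pi_hat3}[A^3_pi(s, a^{1:2}, a^3)],
# where A^3_pi = Q - Q^{1:2}_pi and Q^{1:2}_pi averages Q over a^3 ~ pi^3.
Q12 = np.einsum("ijk,k->ij", Q, pi[2])
lhs = np.einsum("i,j,k,ijk->", pi_bar[0], pi_bar[1], pi_hat3, Q - Q12[:, :, None])

# Right side: E_{a ~ pi}[(pi_hat3 / pi^3 - 1) * (pi_bar^{1:2} / pi^{1:2}) * A_pi(s, a)].
ratio3 = pi_hat3 / pi[2] - 1.0
prev_ratio = np.outer(pi_bar[0] / pi[0], pi_bar[1] / pi[1])
weights = prev_ratio[:, :, None] * ratio3[None, None, :]
rhs = np.einsum("i,j,k,ijk->", pi[0], pi[1], pi[2], weights * A)

print(np.isclose(lhs, rhs))  # True
```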

Notably, Equation (10) aligns nicely with the sequential update scheme in HATRPO. For agent $i_m$, since previous agents $i_{1:m-1}$ have already made their updates, the compound policy ratio for $M^{i_{1:m}}$ in Equation (10) is easy to compute. Given a batch $\mathcal{B}$ of trajectories with length $T$, we can estimate the gradient with respect to policy parameters (derived in Appendix C.2) as follows,

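$$\hat{\boldsymbol{g}}^{i_m}_k = \frac{1}{|\mathcal{B}|}\sum_{\tau \in \mathcal{B}}\sum_{t=0}^{T} M^{i_{1:m}}\left(s_t, \mathbf{a}_t\right)\nabla_{\theta^{i_m}}\log\pi^{i_m}_{\theta^{i_m}}\left(a^{i_m}_t|s_t\right)\Big|_{\theta^{i_m} = \theta^{i_m}_k}.$$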


The term $-1 \cdot M^{i_{1:m}}(s, \mathbf{a})$ of Equation (10) is not reflected in $\hat{\boldsymbol{g}}^{i_m}_k$, as it only introduces a constant with zero gradient. Along with the Hessian of the expected KL-divergence, i.e., $\boldsymbol{H}^{i_m}_k$, we can update $\theta^{i_m}_{k+1}$ by following Equation (8). The detailed pseudocode of HATRPO is listed in Appendix C.3.
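The bookkeeping behind $M^{i_{1:m}}$ can be sketched as follows. This is a minimal NumPy illustration, not the authors' implementation. Random arrays stand in for the advantage estimates. They also stand in for each agent's log-probabilities of its buffered actions before and after its update; in a real run, the post-update values exist only once that agent has finished updating. After each agent updates, its per-sample ratio is folded into a running product that the next agent in the permutation uses.

```python
import numpy as np

rng = np.random.default_rng(0)
n_agents, batch = 3, 5
adv_hat = rng.normal(size=batch)              # joint advantage estimates A_hat(s_t, a_t), e.g. GAE

# Placeholders for log pi^i(a^i_t | s_t) before and after agent i's update.
logp_old = rng.normal(-1.0, 0.1, size=(n_agents, batch))
logp_new = logp_old + rng.normal(0.0, 0.05, size=(n_agents, batch))

def sequential_M(order, logp_old, logp_new, adv_hat):
    """Return {agent: M^{i_{1:m}}(s_t, a_t)} following the update order of Equation (10)."""
    factor = np.ones_like(adv_hat)            # product of earlier agents' new/old ratios
    M = {}
    for agent in order:
        M[agent] = factor * adv_hat           # weighted advantage seen by agent i_m
        # (agent i_m would optimise its surrogate here, producing logp_new[agent])
        factor = factor * np.exp(logp_new[agent] - logp_old[agent])
    return M

order = rng.permutation(n_agents)
M = sequential_M(order, logp_old, logp_new, adv_hat)
print(np.allclose(M[order[0]], adv_hat))      # True: the first agent sees the plain advantage
```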

3.2.2 HAPPO

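To further alleviate the computational burden of $\boldsymbol{H}^{i_m}_k$ in HATRPO, one can follow the idea of PPO and use only first-order derivatives. This is achieved by making agent $i_m$ choose a policy parameter $\theta^{i_m}_{k+1}$ which maximises the clipping objective

$$\mathbb{E}_{s \sim \rho_{\pi_{\theta_k}},\, \mathbf{a} \sim \pi_{\theta_k}}\left[\min\left(\frac{\pi^{i_m}_{\theta^{i_m}}\left(a^{i_m}|s\right)}{\pi^{i_m}_{\theta^{i_m}_k}\left(a^{i_m}|s\right)}M^{i_{1:m}}(s, \mathbf{a}),\ \mathrm{clip}\left(\frac{\pi^{i_m}_{\theta^{i_m}}\left(a^{i_m}|s\right)}{\pi^{i_m}_{\theta^{i_m}_k}\left(a^{i_m}|s\right)}, 1 \pm \epsilon\right)M^{i_{1:m}}(s, \mathbf{a})\right)\right], \tag{11}$$

where $\epsilon$ is the clipping parameter.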

The optimisation can be performed by stochastic gradient methods such as Adam (Kingma and Ba, 2015). We refer to the above procedure as HAPPO; see Appendix C.4 for its full pseudocode.
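Below is a minimal NumPy sketch of the sample estimate of Equation (11) for one agent. It assumes that the per-sample log-probabilities of agent $i_m$'s actions and the weighted advantages $M^{i_{1:m}}$ are already available as arrays. The value `clip_eps = 0.2` is an assumed default, not taken from this section. In practice the objective would be written in an automatic-differentiation framework and maximised with Adam; here we only evaluate it.

```python
import numpy as np

def happo_surrogate(logp_new, logp_old, M, clip_eps=0.2):
    """Sample estimate of the clipped objective in Equation (11) for agent i_m.

    logp_new: log pi^{i_m}_theta(a^{i_m}_t | s_t) under the parameters being optimised
    logp_old: log pi^{i_m}_{theta_k}(a^{i_m}_t | s_t) under the parameters before the update
    M:        M^{i_{1:m}}(s_t, a_t), the compound-ratio-weighted advantage of Equation (10)
    """
    ratio = np.exp(logp_new - logp_old)
    unclipped = ratio * M
    clipped = np.clip(ratio, 1.0 - clip_eps, 1.0 + clip_eps) * M
    return np.mean(np.minimum(unclipped, clipped))

# Toy batch of two samples (values are illustrative only).
value = happo_surrogate(np.log([0.8, 0.2]), np.log([0.5, 0.5]), np.array([1.0, -1.0]))
print(round(float(value), 6))  # 0.2
```

For the first sample the ratio 1.6 is clipped to 1.2. For the second, the minimum keeps the more pessimistic clipped value -0.8 rather than -0.4, so the batch mean is 0.2.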

3.3 Heterogeneous-Agent Mirror Learning: A Continuum of Solutions to Cooperative MARL

Recently, Mirror Learning (Kuba et al., 2022b) provided a theoretical explanation of the effectiveness of TRPO and PPO in addition to the original trust region interpretation, and unified a class of policy optimisation algorithms. Inspired by their work, we further discover a novel theoretical framework for cooperative MARL, named Heterogeneous-Agent Mirror Learning (HAML), which strengthens the theoretical guarantees of HATRPO and HAPPO. As a proven template for algorithmic designs, HAML generalises the guarantees of monotonic improvement and NE convergence to a continuum of algorithms. It naturally hosts HATRPO and HAPPO as its instances, further explaining their robust performance. We begin by introducing the definitions of the HAML attributes, starting with the drift functional.

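Definition 10 Let $i \in \mathcal{N}$. A heterogeneous-agent drift functional (HADF) $\mathfrak{D}^i$ of agent $i$ is a map

$$\mathfrak{D}^i : \Pi \times \Pi \times \mathbb{P}(-i) \times \mathcal{S} \to \left\{\mathfrak{D}^i_{\pi}\left(\cdot \middle| s, \bar{\pi}^{j_{1:m}}\right) : \mathcal{P}\left(\mathcal{A}^i\right) \to \mathbb{R}\right\},$$

such that for all arguments, under the notation $\mathfrak{D}^i_{\pi}\left(\hat{\pi}^i \middle| s, \bar{\pi}^{j_{1:m}}\right) \triangleq \mathfrak{D}^i_{\pi}\left(\hat{\pi}^i(\cdot^i|s) \middle| s, \bar{\pi}^{j_{1:m}}(\cdot|s)\right)$,

1. $\mathfrak{D}^i_{\pi}\left(\hat{\pi}^i \middle| s, \bar{\pi}^{j_{1:m}}\right) \geq \mathfrak{D}^i_{\pi}\left(\pi^i \middle| s, \bar{\pi}^{j_{1:m}}\right) = 0$ (non-negativity),

2. $\mathfrak{D}^i_{\pi}\left(\hat{\pi}^i \middle| s, \bar{\pi}^{j_{1:m}}\right)$ has all Gâteaux derivatives zero at $\hat{\pi}^i = \pi^i$ (zero gradient).

We say that the HADF is positive if $\mathfrak{D}^i_{\pi}(\hat{\pi}^i | s, \bar{\pi}^{j_{1:m}}) = 0, \forall s \in \mathcal{S}$ implies $\hat{\pi}^i = \pi^i$. We say that it is trivial if $\mathfrak{D}^i_{\pi}(\hat{\pi}^i | s, \bar{\pi}^{j_{1:m}}) = 0, \forall s \in \mathcal{S}$ for all $\pi$, $\bar{\pi}^{j_{1:m}}$, and $\hat{\pi}^i$.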


Intuitively, the drift $\mathfrak{D}^i_{\pi}(\hat{\pi}^i | s, \bar{\pi}^{j_{1:m}})$ is a notion of distance between $\pi^i$ and $\hat{\pi}^i$, given that agents $j_{1:m}$ just updated to $\bar{\pi}^{j_{1:m}}$. We highlight that, under this conditionality, the same update (from $\pi^i$ to $\hat{\pi}^i$) can have different sizes. This will later enable HAML agents to softly constrain their learning steps in a coordinated way. Before that, we introduce a notion that captures hard constraints, which may be part of an algorithm design or an inherent limitation.

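Definition 11 Let $i \in \mathcal{N}$. We say that $\mathcal{U}^i : \Pi \times \Pi^i \to \mathbb{P}(\Pi^i)$ is a neighbourhood operator if $\forall \pi^i \in \Pi^i$, $\mathcal{U}^i_{\pi}(\pi^i)$ contains a closed ball. That is, there exists a state-wise monotonically non-decreasing metric $\chi : \Pi^i \times \Pi^i \to \mathbb{R}$ such that $\forall \pi^i \in \Pi^i$ there exists $\delta^i > 0$ such that $\chi(\pi^i, \bar{\pi}^i) \leq \delta^i \implies \bar{\pi}^i \in \mathcal{U}^i_{\pi}(\pi^i)$.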

We associate every joint policy $\pi$ with its sampling distribution, a positive state distribution $\beta_{\pi} \in \mathcal{P}(\mathcal{S})$ that is continuous in $\pi$ (Kuba et al., 2022b). With these notions defined, we introduce the main definition of the HAML framework.

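Definition 12 Let $i \in \mathcal{N}$, $j_{1:m} \in \mathbb{P}(-i)$, and $\mathfrak{D}^i$ be a HADF of agent $i$. The heterogeneous-agent mirror operator (HAMO) integrates the advantage function as

$$\left[\mathcal{M}^{(\hat{\pi}^i)}_{\mathfrak{D}^i, \bar{\pi}^{j_{1:m}}} A_{\pi}\right](s) \triangleq \mathbb{E}_{\mathbf{a}^{j_{1:m}} \sim \bar{\pi}^{j_{1:m}},\, a^i \sim \hat{\pi}^i}\left[A^i_{\pi}\left(s, \mathbf{a}^{j_{1:m}}, a^i\right)\right] - \mathfrak{D}^i_{\pi}\left(\hat{\pi}^i \middle| s, \bar{\pi}^{j_{1:m}}\right).$$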

Note that when $\hat{\pi}^i = \pi^i$, HAMO evaluates to zero: the expected multi-agent advantage vanishes, as in Equation (5), and so does the drift. Therefore, as the HADF is non-negative, a policy $\hat{\pi}^i$ that improves HAMO must make it positive, and thus leads to the improvement of the multi-agent advantage of agent $i$. It turns out that, under certain configurations, agents' local improvements result in the joint improvement of all agents, as described by the lemma below, proved in Appendix D.

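Lemma 13 (HAMO Is All You Need) Let $\pi_{\text{old}}$ and $\pi_{\text{new}}$ be joint policies and let $i_{1:n} \in \mathrm{Sym}(n)$ be an agent permutation. Suppose that, for every state $s \in \mathcal{S}$ and every $m = 1, \dots, n$,

$$\left[\mathcal{M}^{(\pi^{i_m}_{\text{new}})}_{\mathfrak{D}^{i_m}, \pi^{i_{1:m-1}}_{\text{new}}} A_{\pi_{\text{old}}}\right](s) \geq \left[\mathcal{M}^{(\pi^{i_m}_{\text{old}})}_{\mathfrak{D}^{i_m}, \pi^{i_{1:m-1}}_{\text{new}}} A_{\pi_{\text{old}}}\right](s). \tag{12}$$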

Then, $\pi_{\text{new}}$ is jointly better than $\pi_{\text{old}}$, so that for every state $s$,

$$V_{\pi_{\text{new}}}(s) \geq V_{\pi_{\text{old}}}(s).$$

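Subsequently, the monotonic improvement property of the joint return follows naturally, as

$$J(\pi_{\text{new}}) = \mathbb{E}_{s \sim d}\left[V_{\pi_{\text{new}}}(s)\right] \geq \mathbb{E}_{s \sim d}\left[V_{\pi_{\text{old}}}(s)\right] = J(\pi_{\text{old}}).$$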
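The NumPy sketch below builds intuition for the HAMO of Definition 12 and the condition of Lemma 13. It evaluates HAMO at a single state for an agent $i$ that follows an already-updated agent $j$. The drift is our own illustrative choice: a scaled squared Euclidean distance between $\hat{\pi}^i(\cdot|s)$ and $\pi^i(\cdot|s)$. It is non-negative, vanishes at $\hat{\pi}^i = \pi^i$, and has zero gradient there, satisfying both conditions of Definition 10. The payoffs and policies are random. With the old policy HAMO is exactly zero, so Inequality (12) amounts to asking each new policy to achieve a non-negative HAMO.

```python
import numpy as np

rng = np.random.default_rng(2)
Q = rng.normal(size=(3, 4))               # joint Q_pi(s, a^j, a^i) at a single state s
pi_i = rng.dirichlet(np.ones(4))          # agent i's current (old) policy pi^i(.|s)
pi_j_bar = rng.dirichlet(np.ones(3))      # agent j has already updated to this policy

# Multi-agent advantage A^i_pi(s, a^j, a^i) = Q_pi(s, a^j, a^i) - Q^j_pi(s, a^j).
A_i = Q - (Q @ pi_i)[:, None]

def drift(pi_i_hat, scale=1.0):
    """Illustrative HADF: scale * ||pi_i_hat - pi_i||^2 (non-negative, zero gradient at pi_i)."""
    return scale * np.sum((pi_i_hat - pi_i) ** 2)

def hamo(pi_i_hat):
    """HAMO of Definition 12 at the single state, for a candidate policy pi_i_hat."""
    return pi_j_bar @ A_i @ pi_i_hat - drift(pi_i_hat)

print(np.isclose(hamo(pi_i), 0.0))        # True: keeping the old policy gives zero HAMO
```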

However, the conditions of the lemma require every agent to solve $|\mathcal{S}|$ instances of Inequality (12), which may be intractable. Instead, we shall design a single optimisation objective whose solution satisfies those inequalities. Furthermore, to be applicable to large-scale problems, such an objective should be estimable via sampling. To handle these challenges, we introduce the following Algorithm Template 2, which generates a continuum of HAML algorithms.


Algorithm Template 2: Heterogeneous-Agent Mirror Learning

Initialise a joint policy $\pi_0 = (\pi^1_0, \dots, \pi^n_0)$;

for $k = 0, 1, \dots$ do

    Compute the advantage function $A_{\pi_k}(s, \mathbf{a})$ for all state-(joint)action pairs $(s, \mathbf{a})$;

    Draw a permutation $i_{1:n}$ of agents at random // from a positive distribution $p \in \mathcal{P}(\mathrm{Sym}(n))$;

    for $m = 1 : n$ do

        Make an update $\pi^{i_m}_{k+1} = \arg\max_{\pi^{i_m} \in \mathcal{U}^{i_m}_{\pi_k}(\pi^{i_m}_k)} \mathbb{E}_{s \sim \beta_{\pi_k}}\left[\left[\mathcal{M}^{(\pi^{i_m})}_{\mathfrak{D}^{i_m}, \pi^{i_{1:m-1}}_{k+1}} A_{\pi_k}\right](s)\right]$;

Output: A limit-point joint policy $\pi_{\infty}$

Based on Lemma 13 and the fact that $\pi^i \in \mathcal{U}^i_{\pi}(\pi^i), \forall i \in \mathcal{N}, \pi^i \in \Pi^i$, it follows that any HAML algorithm (weakly) improves the joint return at every iteration. In practical settings, such as deep MARL, the maximisation step of a HAML method can be performed by a few steps of gradient ascent on a sample average of HAMO (see Definition 12). We also highlight that if the neighbourhood operators $\mathcal{U}^i$ can be chosen so that they produce small policy-space subsets, then the resulting updates will be not only improving but also small. This, again, is a desirable property while optimising neural-network policies, as it helps stabilise the algorithm. Similar to HATRL, the order of agents in HAML updates is randomised at every iteration. This condition is needed to establish convergence to NE, and it is intuitive: at a fixed point of this randomised procedure, none of the agents is incentivised to make an update, which means a NE has been reached. We provide the full list of the most fundamental HAML properties in Theorem 14. It shows that any method derived from Algorithm Template 2 solves the cooperative MARL problem, in the sense of monotonic improvement and convergence to NE.

Theorem 14 (The Fundamental Theorem of Heterogeneous-Agent Mirror Learning) For every agent $i \in \mathcal{N}$, let $\mathfrak{D}^i$ be a HADF and $\mathcal{U}^i$ be a neighbourhood operator, and let the sampling distributions $\beta_{\pi}$ depend continuously on $\pi$. Let $\pi_0 \in \Pi$, and let the sequence of joint policies $(\pi_k)_{k=0}^{\infty}$ be obtained by a HAML algorithm induced by $\mathfrak{D}^i$, $\mathcal{U}^i$, $\forall i \in \mathcal{N}$, and $\beta_{\pi}$. Then, the joint policies induced by the algorithm enjoy the following list of properties:

1. They attain the monotonic improvement property,
$$J(\pi_{k+1}) \geq J(\pi_k),$$

2. Their value functions converge to a Nash value function $V^{\mathrm{NE}}$,
$$\lim_{k \to \infty} V_{\pi_k} = V^{\mathrm{NE}},$$

3. Their expected returns converge to a Nash return,
$$\lim_{k \to \infty} J(\pi_k) = J^{\mathrm{NE}},$$

4. Their $\omega$-limit set consists of Nash equilibria.

See the proof in Appendix E. With the above theorem, we can conclude that HAML provides a template for generating theoretically sound, stable, monotonically improving algorithms that enable agents to learn to solve multi-agent cooperation tasks.


3.4 Casting HATRPO and HAPPO as HAML Instances

In this subsection, we show that HATRPO and HAPPO are in fact valid instances of HAML, which provides a more direct theoretical explanation for their strong empirical performance.

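We begin with the example of HATRPO, where agent $i_m$ (the permutation $i_{1:n}$ is drawn from the uniform distribution) updates its policy so as to maximise (in $\bar{\pi}^{i_m}$)

$$\mathbb{E}_{s \sim \rho_{\pi_{\text{old}}},\, \mathbf{a}^{i_{1:m-1}} \sim \pi^{i_{1:m-1}}_{\text{new}},\, a^{i_m} \sim \bar{\pi}^{i_m}}\left[A^{i_m}_{\pi_{\text{old}}}\left(s, \mathbf{a}^{i_{1:m-1}}, a^{i_m}\right)\right], \quad \text{subject to } D_{\mathrm{KL}}\left(\pi^{i_m}_{\text{old}}, \bar{\pi}^{i_m}\right) \leq \delta.$$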

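This optimisation objective can be cast as a HAMO with the HADF $\mathfrak{D}^{i_m} \equiv 0$ and the KL-divergence neighbourhood operator

$$\mathcal{U}^{i_m}_{\pi}\left(\pi^{i_m}\right) = \left\{\bar{\pi}^{i_m} \,\middle|\, \mathbb{E}_{s \sim \rho_{\pi}}\left[\mathrm{KL}\left(\pi^{i_m}(\cdot^{i_m}|s), \bar{\pi}^{i_m}(\cdot^{i_m}|s)\right)\right] \leq \delta\right\}.$$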

The sampling distribution used in HATRPO is $\beta_{\pi} = \rho_{\pi}$. Lastly, as the agents update their policies in a random order, the algorithm is an instance of HAML. Hence, it is monotonically improving and converges to a Nash equilibrium set.

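In HAPPO, the update rule of agent $i_m$ is changed with respect to HATRPO as

$$\mathbb{E}_{s \sim \rho_{\pi_{\text{old}}},\, \mathbf{a}^{i_{1:m-1}} \sim \pi^{i_{1:m-1}}_{\text{new}},\, a^{i_m} \sim \pi^{i_m}_{\text{old}}}\left[\min\left(r\left(\bar{\pi}^{i_m}\right)A^{i_{1:m}}_{\pi_{\text{old}}}\left(s, \mathbf{a}^{i_{1:m}}\right),\ \mathrm{clip}\left(r\left(\bar{\pi}^{i_m}\right), 1 \pm \epsilon\right)A^{i_{1:m}}_{\pi_{\text{old}}}\left(s, \mathbf{a}^{i_{1:m}}\right)\right)\right],$$

where $r(\bar{\pi}^i) = \frac{\bar{\pi}^i(a^i|s)}{\pi^i_{\text{old}}(a^i|s)}$ and $\epsilon$ is the clipping parameter. We show in Appendix F that this optimisation objective is equivalent to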

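$$\mathbb{E}_{s \sim \rho_{\pi_{\text{old}}}}\Big[\mathbb{E}_{\mathbf{a}^{i_{1:m-1}} \sim \pi^{i_{1:m-1}}_{\text{new}},\, a^{i_m} \sim \bar{\pi}^{i_m}}\left[A^{i_m}_{\pi_{\text{old}}}\left(s, \mathbf{a}^{i_{1:m-1}}, a^{i_m}\right)\right] - \mathbb{E}_{\mathbf{a}^{i_{1:m-1}} \sim \pi^{i_{1:m-1}}_{\text{new}},\, a^{i_m} \sim \pi^{i_m}_{\text{old}}}\left[\mathrm{ReLU}\left(\left[r\left(\bar{\pi}^{i_m}\right) - \mathrm{clip}\left(r\left(\bar{\pi}^{i_m}\right), 1 \pm \epsilon\right)\right]A^{i_{1:m}}_{\pi_{\text{old}}}\left(s, \mathbf{a}^{i_{1:m}}\right)\right)\right]\Big].$$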

The subtracted ReLU term is clearly non-negative. Furthermore, for policies $\bar{\pi}^{i_m}$ sufficiently close to $\pi^{i_m}_{\text{old}}$, the clip operator does not activate, thus leaving $r(\bar{\pi}^{i_m})$ unchanged. Therefore, the ReLU term is zero at and in a region around $\bar{\pi}^{i_m} = \pi^{i_m}_{\text{old}}$, which also implies that its Gâteaux derivatives are zero there. Hence, it defines a valid HADF for agent $i_m$, making HAPPO a valid HAML instance.
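The decomposition above rests on an elementwise identity, $\min(rA, \mathrm{clip}(r, 1 \pm \epsilon)A) = rA - \mathrm{ReLU}([r - \mathrm{clip}(r, 1 \pm \epsilon)]A)$. It is combined with the importance-sampling identity $\mathbb{E}_{a^{i_m} \sim \pi^{i_m}_{\text{old}}}[r\,A] = \mathbb{E}_{a^{i_m} \sim \bar{\pi}^{i_m}}[A]$. The NumPy snippet below checks the first identity on random ratios and advantages. It also confirms that the ReLU term is non-negative and vanishes whenever the ratio stays inside the clipping range; $\epsilon = 0.2$ is an arbitrary choice.

```python
import numpy as np

rng = np.random.default_rng(3)
eps = 0.2
r = rng.uniform(0.5, 1.5, size=1000)        # probability ratios r(pi_bar^{i_m})
A = rng.normal(size=1000)                   # advantage values
c = np.clip(r, 1 - eps, 1 + eps)

lhs = np.minimum(r * A, c * A)              # clipped (PPO-style) objective per sample
penalty = np.maximum((r - c) * A, 0.0)      # ReLU term, the drift in the HAML view
print(np.allclose(lhs, r * A - penalty))    # True
print(np.all(penalty >= 0.0))               # True: the drift is non-negative
inside = np.abs(r - 1) <= eps
print(np.all(penalty[inside] == 0.0))       # True: zero while the clip is inactive
```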

Finally, we would like to highlight that these conclusions about HATRPO and HAPPO strengthen the results in Sections 3.1 and 3.2. In addition to their origin in HATRL, we now show that their optimisation objectives directly enjoy favourable theoretical properties endowed by the HAML framework. Both interpretations underpin their empirical performance.

3.5 More HAML Instances

In this subsection, we exemplify how HAML can be used to derive principled MARL algorithms, solely by constructing a valid drift functional, neighbourhood operator, and sampling distribution. Our goal is to put the HAML theory into practice and enrich cooperative MARL with more theoretically guaranteed and practical algorithms. The results are more robust heterogeneous-agent versions of popular RL algorithms including A2C, DDPG, and TD3, different from those in Section 2.3.2.


Figure 3: This figure presents a simplified schematic overview of HARL algorithms represented as valid instances of HAML. The complete details are available in Appendix H. By recasting HATRPO and HAPPO as HAML formulations, we demonstrate that their guarantees pertaining to monotonic improvement and NE convergence are enhanced by leveraging the HAML framework. Moreover, HAA2C, HADDPG, and HATD3 are obtained by designing HAML components, thereby securing those same performance guarantees. The variety of drift functionals, neighborhood operators, and sampling distributions utilised by these approaches further attests to the versatility and richness of the HAML framework.

3.5.1 HAA2C

HAA2C intends to optimise the policy for the joint advantage function at every iteration and, similar to A2C, does not impose any penalties or constraints on that procedure. This learning procedure is accomplished by first drawing a random permutation of agents $i_{1:n}$, and then performing a few steps of gradient ascent on the objective

$$\mathbb{E}_{s \sim \rho_{\pi_{\text{old}}},\, \mathbf{a}^{i_{1:m}} \sim \pi^{i_{1:m}}_{\text{old}}}\left[\frac{\pi^{i_{1:m-1}}_{\text{new}}\left(\mathbf{a}^{i_{1:m-1}}|s\right)\pi^{i_m}\left(a^{i_m}|s\right)}{\pi^{i_{1:m-1}}_{\text{old}}\left(\mathbf{a}^{i_{1:m-1}}|s\right)\pi^{i_m}_{\text{old}}\left(a^{i_m}|s\right)}A^{i_m}_{\pi_{\text{old}}}\left(s, \mathbf{a}^{i_{1:m-1}}, a^{i_m}\right)\right], \tag{13}$$

with respect to the parameters of $\pi^{i_m}$, for each agent $i_m$ in the permutation, sequentially. In practice, we replace the multi-agent advantage $A^{i_m}_{\pi_{\text{old}}}(s, \mathbf{a}^{i_{1:m-1}}, a^{i_m})$ with the joint advantage estimate. Thanks to the joint importance sampling in Equation (13), this poses the same objective on the agent (see Appendix G for full pseudocode).

3.5.2 HADDPG

HADDPG exploits the fact that $\beta_{\pi}$ can be independent of $\pi$ and aims to maximise the state-action value function off-policy. As it is a deterministic-action method, importance sampling in its case translates to replacement of the old action inputs to the critic with the new ones. Namely, agent $i_m$ in a random permutation $i_{1:n}$ maximises

$$\mathbb{E}_{s \sim \beta_{\mu_{\text{old}}}}\left[Q^{i_{1:m}}_{\mu_{\text{old}}}\left(s, \mu^{i_{1:m-1}}_{\text{new}}(s), \mu^{i_m}(s)\right)\right], \tag{14}$$

with respect to $\mu^{i_m}$, also with a few steps of gradient ascent. Similar to HAA2C, optimising the state-action value function (with the old actions replaced by new ones) is equivalent to optimising the original multi-agent advantage. The two differ only by a term that does not depend on $\mu^{i_m}$ (see Appendix G for full pseudocode).
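The action replacement behind Equation (14) can be sketched as follows. This NumPy sketch rests on our own assumptions:

- A centralised critic reads the state concatenated with all agents' actions.
- `policy_W` and `critic_w` are hypothetical linear stand-ins for the actor and critic networks, and `policy_W` is assumed to already hold the updated weights of agents earlier in the permutation.
- Agents later in the permutation keep the actions stored in the replay buffer.

The sketch only assembles the critic input and evaluates the objective. The gradient step on agent $i_m$'s parameters would be left to an automatic-differentiation library.

```python
import numpy as np

rng = np.random.default_rng(4)
n_agents, state_dim, act_dim, batch = 3, 4, 2, 8
states = rng.normal(size=(batch, state_dim))
buffer_actions = rng.normal(size=(n_agents, batch, act_dim))  # actions stored in the replay buffer

# Hypothetical linear stand-ins: mu^i(s) = s @ policy_W[i] and a linear centralised critic.
policy_W = [rng.normal(size=(state_dim, act_dim)) for _ in range(n_agents)]
critic_w = rng.normal(size=state_dim + n_agents * act_dim)

def critic(s, joint_action):
    """Q(s, a) evaluated on a batch; a linear placeholder for the critic network."""
    return np.concatenate([s, joint_action], axis=1) @ critic_w

def critic_input_actions(order, m, candidate_action):
    """Joint action fed to the critic while agent order[m] is being optimised."""
    actions = buffer_actions.copy()               # later agents keep their buffer actions
    for prev in order[:m]:
        actions[prev] = states @ policy_W[prev]   # earlier agents: new deterministic actions
    actions[order[m]] = candidate_action          # agent i_m: action of the policy being optimised
    return np.concatenate(list(actions), axis=1)  # shape (batch, n_agents * act_dim)

order = rng.permutation(n_agents)
m = 1                                             # optimise the second agent in the permutation
joint = critic_input_actions(order, m, states @ policy_W[order[m]])
objective = critic(states, joint).mean()          # sample estimate of Equation (14)
```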


3.5.3 HATD3

HATD3 improves HADDPG with tricks proposed by Fujimoto et al. (2018). Similar to HADDPG, HATD3 is also an off-policy algorithm and optimises the same target. However, it employs target policy smoothing, clipped double Q-learning, and delayed policy updates (see Appendix G for full pseudocode). We observe that HATD3 consistently outperforms HADDPG on all tasks. This shows that relevant single-agent reinforcement learning techniques can be directly applied to MARL without the need for rediscovery, another benefit of HAML.

As the HADDPG and HATD3 algorithms have been derived, it is logical to consider

the possibility of HADQN, given that DQN can be viewed as a pure value-based version of

DDPG for discrete action problems. In light of this, we introduce HAD3QN, a value-based

approximation of HADDPG that incorporates techniques proposed by Van Hasselt et al.

(2016) and Wang et al. (2016). The details of HAD3QN are presented in Appendix I, which

includes the pseudocode, performance analysis, and an ablation study demonstrating the

importance of the dueling double Q-network architecture for achieving stable and efficient

multi-agent learning.

To elucidate the formulations and differences of HARL approaches in their HAML representation, we provide a simplified summary in Figure 3 and list the full details in Appendix H. While these approaches already tailor HADFs, neighbourhood operators, and sampling distributions, we expect that much of the HAML framework remains to be explored in future work. For now, we address the heterogeneous-agent cooperation problem with these five methods and analyse their performance in Section 5.

4. Related Work

Previous work has attempted to solve the cooperative MARL problem by developing multi-agent trust region learning theories. Despite empirical successes, most of these attempts did not manage to propose a theoretically-justified trust region protocol in multi-agent learning, or to maintain the monotonic improvement property. Instead, they tend to impose certain assumptions to enable direct implementations of TRPO/PPO in MARL problems. For example, IPPO (de Witt et al., 2020) assumes homogeneity of action spaces for all agents and enforces parameter sharing. Yu et al. (2022) proposed MAPPO, which enhances IPPO by considering a joint critic function and finer implementation techniques for on-policy methods. Yet, it still suffers from drawbacks similar to those of IPPO due to the lack of a monotonic improvement guarantee, especially when the parameter-sharing condition is switched off.

Wen et al. (2022) adjusted PPO for MARL by considering a game-theoretical approach at the meta-game level among agents. Unfortunately, it can only deal with two-agent cases due to the intractability of Nash equilibrium. Recently, Li and He (2023) tried to implement TRPO for MARL through distributed consensus optimisation. However, they enforced the same ratio $\bar{\pi}^i(a^i|s)/\pi^i(a^i|s)$ for all agents (see their Equation (7)), which, similar to parameter sharing, largely limits the policy space for optimisation. Moreover, their method comes with a $\delta/n$ KL-constraint threshold, which becomes very restrictive when the number of agents is large. Coordinated PPO (CoPPO) (Wu et al., 2021) derived a theoretically-grounded joint objective and obtained practical algorithms through a set of approximations. Still, it suffers from the non-stationarity problem, as it updates agents simultaneously.


One of the key ideas behind our Heterogeneous-Agent algorithm series is the sequential

update scheme. A similar idea of multi-agent sequential update was also discussed in the

context of dynamic programming (Bertsekas, 2019) where artificial "in-between" states have

to be considered. In contrast, our sequential update scheme is developed based on Lemma 4, which does not require any artificial assumptions and holds for any cooperative game. The idea of sequential update also appeared in principal component analysis; in

EigenGame (Gemp et al., 2021) eigenvectors, represented as players, maximise their own

utility functions one-by-one. Although EigenGame provably solves the PCA problem, it is

of little use in MARL, where a single iteration of sequential updates is insufficient to learn

complex policies. Furthermore, its design and analysis rely on closed-form matrix calculus,

which has no extension to MARL.

Lastly, we would like to highlight the importance of the decomposition result in Lemma

4. This result could serve as an effective solution to value-based methods in MARL where

tremendous efforts have been made to decompose the joint Q-function into individual Q-functions when the joint Q-function is decomposable (Rashid et al., 2018). Lemma 4, in contrast, is a general result that holds for any cooperative MARL problem regardless of decomposability. As such, we think of it as an appealing contribution to future developments on value-based MARL methods.

Our work is an extension of previous work HATRPO / HAPPO, which was originally

proposed in a conference paper (Kuba et al., 2022a). The main additions in our work are:

• Introducing Heterogeneous-Agent Mirror Learning (HAML), a more general theoretical

framework that strengthens theoretical guarantees for HATRPO and HAPPO and can

induce a continuum of sound algorithms with guarantees of monotonic improvement

and convergence to Nash Equilibrium;

• Designing novel algorithm instances of HAML including HAA2C, HADDPG, and

HATD3, which attain better performance than their existing MA-counterparts, with

HATD3 establishing the new SOTA results for off-policy algorithms;

• Releasing a PyTorch-based implementation of HARL algorithms, which is more unified, modularised, user-friendly, extensible, and effective than the previous one;

• Conducting comprehensive experiments evaluating HARL algorithms on six challenging benchmarks: Multi-Agent Particle Environment (MPE), Multi-Agent MuJoCo (MAMuJoCo), StarCraft Multi-Agent Challenge (SMAC), SMACv2, Google Research Football Environment (GRF), and Bi-DexterousHands.

5. Experiments and Analysis

In this section, we evaluate and analyse HARL algorithms on six cooperative multi-agent benchmarks, shown in Figure 4. The benchmarks are:

• Multi-Agent Particle Environment (MPE) (Lowe et al., 2017; Mordatch and Abbeel, 2018);

• Multi-Agent MuJoCo (MAMuJoCo) (Peng et al., 2021);

• StarCraft Multi-Agent Challenge (SMAC) (Samvelyan et al., 2019);

• SMACv2 (Ellis et al., 2022);

• Google Research Football Environment (GRF) (Kurach et al., 2020);

• Bi-DexterousHands (Chen et al., 2022).

We compare the performance of HARL algorithms to existing SOTA methods. These benchmarks are diverse in task difficulty, agent number, action type, dimensionality of observation space and action space, and cooperation strategy required. Hence they provide a comprehensive assessment of the effectiveness, stability, robustness, and generality of our methods. The experimental results demonstrate that HAPPO, HADDPG, and HATD3 generally outperform their MA-counterparts on heterogeneous-agent cooperation tasks. Moreover, among HARL algorithms, HAPPO and HATD3 exhibit superior effectiveness and stability for heterogeneous-agent cooperation tasks over existing strong baselines such as MAPPO, QMIX, MADDPG, and MATD3, setting new state-of-the-art results. Our ablation study also reveals that the novel details introduced by HATRL and HAML theories, namely non-sharing of parameters and randomised order in sequential update, are crucial for obtaining the strong performance. Finally, we empirically show that the computational overhead introduced by sequential update does not need to be a concern.

Figure 4: The six environments used for testing HARL algorithms.

Our implementation of HARL algorithms takes advantage of the sequential update

scheme and the CTDE framework that HARL algorithms share in common, and unifies

them into either the on-policy or the off-policy training pipeline, resulting in modularisation

and extensibility. It also naturally hosts MAPPO, MADDPG, and MATD3 as special cases

and provides the (re)implementation of these three algorithms along with HARL algorithms.

For fair comparisons, we use our (re)implementation of MAPPO, MADDPG, and MATD3 as baselines on MPE and MAMuJoCo, where published results under exactly the same settings are lacking. We ensure that their performance matches or exceeds the results reported by their original papers and subsequent papers. On the other benchmarks, the original implementations of baselines are used. To be consistent with the officially reported results of MAPPO, we let it utilise parameter sharing on all tasks except those in Bi-DexterousHands and the Speaker Listener task in MPE. Details of hyper-parameters and experiment setups can be found in Appendix K.

5.1 MPE Testbed

We consider the three fully cooperative tasks in MPE (Lowe et al., 2017): Spread, Reference,