The Advanced Computing Systems Association

THE USENIX MAGAZINE

FEBRUARY 2008 VOLUME 33 NUMBER 1

OPINION Musings 2

RIK FARROW

SYSADMIN Fear and Loathing in the Routing System 5

JOE ABLEY

From x=1 to (setf x 1): What Does

Configuration Management Mean? 12

ALVA COUCH

http:BL: Taking DNSBL Beyond SMTP 19

ERIC LANGHEINRICH

Centralized Package Management

Using Stork 25

JUSTIN SAMUEL, JEREMY PLICHTA,

AND JUSTIN CAPPOS

Managing Distributed Applications

with Plush 32

JEANNIE ALBRECHT, RYAN BRAUD,

DARREN DAO, NIKOLAY TOPILSKI,

CHRISTOPHER TUTTLE, ALEX C. SNOEREN,

AND AMIN VAHDAT

An Introduction to Logical Domains 39

OCTAVE ORGERON

PROGRAMMING Insecurities in Designing XML Signatures 48

ADITYA K SOOD

COLUMNS Practical Perl Tools: Why I Live at the P.O. 54

DAVID N. BLANK-EDELMAN

Pete’s All Things Sun (PATS):

The Future of Sun 61

PETER BAER GALVIN

iVoyeur: Permission to Parse 65

DAVID JOSEPHSEN

/dev/random 72

ROBERT G. FERRELL

Toward Attributes 74

NICK STOUGHTON

BOOK REVIEWS Book Reviews 78

ÆLEEN FRISCH, BRAD KNOWLES, AND SAM

STOVER

USENIX NOTES 2008 USENIX Nominating Committee

Report 82

MICHAEL B. JONES AND DAN GEER

Summary of USENIX Board of Directors

Meetings and Actions 83

ELLIE YOUNG

New on the USENIX Web Site:

The Multimedia Page 83

ANNE DICKISON

CONFERENCE SUMMARIES LISA ’07: 21st Large Installation System Administration Conference 84


2ND INTERNATIONAL CONFERENCE ON DISTRIBUTED

EVENT-BASED SYSTEMS (DEBS 2008)

Organized in cooperation with USENIX, the IEEE and IEEE Computer Society, and ACM (SIGSOFT)

JULY 2–4, 2008, ROME, ITALY

http://debs08.dis.uniroma1.it/

Abstract submissions due: March 9, 2008

2008 USENIX/ACCURATE ELECTRONIC

VOTING TECHNOLOGY WORKSHOP (EVT ’08)

Co-located with USENIX Security ’08

JULY 28–29, 2008, SAN JOSE, CA, USA

http://www.usenix.org/evt08

Paper submissions due: March 28, 2008

2ND USENIX WORKSHOP ON OFFENSIVE

TECHNOLOGIES (WOOT ’08)

Co-located with USENIX Security ’08

JULY 28, 2008, SAN JOSE, CA, USA

17TH USENIX SECURITY SYMPOSIUM

JULY 28–AUGUST 1, 2008, SAN JOSE, CA, USA

http://www.usenix.org/sec08

3RD USENIX WORKSHOP ON HOT TOPICS IN

SECURITY (HOTSEC ’08)

Co-located with USENIX Security ’08

JULY 29, 2008, SAN JOSE, CA, USA

http://www.usenix.org/hotsec08

Position paper submissions due: May 28, 2008

22ND LARGE INSTALLATION SYSTEM

ADMINISTRATION CONFERENCE (LISA ’08)

Sponsored by USENIX and SAGE

NOVEMBER 9–14, 2008, SAN DIEGO, CA, USA

http://www.usenix.org/lisa08

Extended abstract and paper submissions due: May 8, 2008

8TH USENIX SYMPOSIUM ON OPERATING SYSTEMS

DESIGN AND IMPLEMENTATION (OSDI ’08)

Sponsored by USENIX in cooperation with ACM SIGOPS

DECEMBER 8–10, 2008, SAN DIEGO, CA, USA

http://www.usenix.org/osdi08

Paper submissions due: May 8, 2008

2008 ACM INTERNATIONAL CONFERENCE ON

VIRTUAL EXECUTION ENVIRONMENTS (VEE ’08)

Sponsored by ACM SIGPLAN in cooperation with USENIX

MARCH 5–7, 2008, SEATTLE, WA, USA

http://vee08.cs.tcd.ie

USABILITY, PSYCHOLOGY, AND SECURITY 2008

Co-located with NSDI ’08

APRIL 14, 2008, SAN FRANCISCO, CA, USA

http://www.usenix.org/upsec08

FIRST USENIX WORKSHOP ON LARGE-SCALE

EXPLOITS AND EMERGENT THREATS (LEET ’08)

Botnets, Spyware, Worms, and More

Co-located with NSDI ’08

APRIL 15, 2008, SAN FRANCISCO, CA, USA

http://www.usenix.org/leet08

WORKSHOP ON ORGANIZING WORKSHOPS, CONFERENCES, AND SYMPOSIA FOR COMPUTER SYSTEMS (WOWCS ’08)

Co-located with NSDI ’08

APRIL 15, 2008, SAN FRANCISCO, CA, USA

http://www.usenix.org/wowcs08

5TH USENIX SYMPOSIUM ON NETWORKED

SYSTEMS DESIGN AND IMPLEMENTATION

(NSDI ’08)

Sponsored by USENIX in cooperation with ACM SIGCOMM

and ACM SIGOPS

APRIL 16–18, 2008, SAN FRANCISCO, CA, USA

http://www.usenix.org/nsdi08

THE SIXTH INTERNATIONAL CONFERENCE ON

MOBILE SYSTEMS, APPLICATIONS, AND SERVICES

(MOBISYS 2008)

Jointly sponsored by ACM SIGMOBILE and USENIX

JUNE 10–13, 2008, BRECKENRIDGE, CO, USA

http://www.sigmobile.org/mobisys/2008/

2008 USENIX ANNUAL TECHNICAL CONFERENCE

JUNE 22–27, 2008, BOSTON, MA, USA

http://www.usenix.org/usenix08

Upcoming Events

For a complete list of all USENIX & USENIX co-sponsored events,

see http://www.usenix.org/events.


contents

VOL. 33, #1, FEBRUARY 2008

EDITOR

Rik Farrow

MANAGING EDITOR

Jane-Ellen Long

COPY EDITOR

David Couzens

PRODUCTION

Casey Henderson

Michele Nelson

TYPESETTER

Star Type

startype@comcast.net

USENIX ASSOCIATION

2560 Ninth Street,

Suite 215, Berkeley,

California 94710

Phone: (510) 528-8649 FAX:

(510) 548-5738

http://www.usenix.org

http://www.sage.org

;login: is the official magazine of the USENIX Association.

;login: (ISSN 1044-6397) is published bi-monthly by the USENIX Association, 2560 Ninth Street, Suite 215, Berkeley, CA 94710.

$85 of each member’s annual dues is for an annual subscription to ;login:. Subscriptions for nonmembers are $120 per year.

Periodicals postage paid at Berkeley, CA, and additional offices.

POSTMASTER: Send address changes to ;login:, USENIX Association, 2560 Ninth Street, Suite 215, Berkeley, CA 94710.

©2008 USENIX Association

USENIX is a registered trademark of the USENIX Association. Many of the designations used by manufacturers and sellers to distinguish their products are claimed as trademarks. USENIX acknowledges all trademarks herein. Where those designations appear in this publication and USENIX is aware of a trademark claim, the designations have been printed in caps or initial caps.


2 ;LOGIN: VOL. 33, NO. 1

RIK FARROW

musings

THERE ARE TIMES WHEN WE JUST can’t wait for the future to arrive, such as the coming of warmer weather. And sometimes it seems that people pine for the poorly remembered past, as if it were somehow better than what we face today. Right now, I want to talk about sysadmins and ponder whether they are looking ahead while wishing for an imagined past.

In this issue you will find the summaries for LISA ’07, including the summary I wrote about John Strassner’s keynote. John spoke about experiences with a project at Motorola where researchers had created a functioning example of network autonomics. This is a complex system, with many different active components all contributing to decisions that result in changes in configuration. The FOCALE architecture (see slide 23 of his presentation on the LISA ’07 page [1]) has a Context Manager, a Policy Manager, and an Autonomic Manager, as well as a machine learning component, all of which are involved in controlling the creation and modification of device configurations.

FOCALE is a working system. It actually helps to simplify a terribly complex control setup that includes seven different groups of administrators (see slide 4). John carefully began his talk by explaining the existing situation found in many telecommunications companies (think cell phone operators). He explained the limitations of current network management, including the need for human involvement in analysis before anything can be done. And he described what he means by autonomics, going well beyond the infamous four self-functions of self-configuration, self-protection, self-healing, and self-optimization made famous by IBM [2, 3]. John regards these as benefits and sees the way forward in knowledge about component systems, the context in which they operate, and an ability to learn and reason, to follow policy determined from business rules, and to adapt offered services and resources as necessary.

I thought John’s talk described groundbreaking research, where a real autonomic system was working to make a network function more smoothly. But others at the conference weren’t nearly as happy. The most common complaint, one that really stuck with me, was that there was “too much math” in his solution. I wondered whether the two equations found on slide 47 (shades of calculus!) were to blame. But then I read Alva Couch’s article (page 12) and realized that perhaps the real problem was something completely different. The real problem has to do with two things: a mindset, and being stuck in the past.

The Mindset

Alva Couch explains something I have had difficulty understanding since I first encountered the concept, way back when I was a college student. I found languages such as FORTRAN and ALGOL easy to comprehend, but LISP and APL unpleasant to use. I’ve recently learned that there is a “semantic wall” between these two kinds of languages, to borrow from Alva. To use a more modern example, the C language is bottom-up, or imperative: you write a sequence of commands, and they are executed in order. Functional programming languages, such as LISP or Haskell, work top-down, where the entire program is a single expression. In a functional language, the expression describes the desired result without specifying how the result is derived.

Now consider how system administration gets accomplished today. Someone requests a change to a service, and sysadmins go about changing the configuration, an imperative operation. If something breaks, the sysadmins set about uncovering the cause of the problem and adjusting the configuration to solve it: again, a bottom-up approach.

What John Strassner and Alva Couch suggest requires a very different mindset. Instead of acting imperatively, getting right into the nitty-gritty of configuration editing, autonomics requires a more functional, top-down approach. I believe that a lot of sysadmins will find this approach inimical to the way they have carried out their duties for their entire working careers.
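To make the imperative versus top-down distinction concrete for configuration work, here is a minimal Python sketch. It is not taken from Couch’s or Strassner’s work, and the setting names (ntp_server, max_clients, legacy_option) are hypothetical. The imperative function is a script of edits whose outcome depends on the state it starts from; the declarative version states the desired configuration and derives the edits from it.

```python
# Imperative vs. declarative configuration (hypothetical settings, illustration only).


def imperative_change(config: dict) -> dict:
    """A sequence of edits, executed in order, against whatever state exists."""
    config = dict(config)                                         # work on a copy
    config["ntp_server"] = "10.0.0.1"                             # edit one setting
    config["max_clients"] = config.get("max_clients", 100) + 50   # relative edit
    config.pop("legacy_option", None)                             # drop an obsolete setting
    return config


# Declarative: describe what the whole configuration should be, not how to get there.
DESIRED = {"ntp_server": "10.0.0.1", "max_clients": 150}


def plan(current: dict, desired: dict) -> list:
    """Derive the edits needed to reach `desired` from any `current` state."""
    edits = [("set", k, v) for k, v in desired.items() if current.get(k) != v]
    edits += [("delete", k, None) for k in current if k not in desired]
    return edits


def converge(current: dict, desired: dict) -> dict:
    """Apply the derived plan to a copy of `current`."""
    result = dict(current)
    for op, key, value in plan(current, desired):
        if op == "set":
            result[key] = value
        else:
            del result[key]
    return result


if __name__ == "__main__":
    start = {"max_clients": 100, "legacy_option": True}
    once = imperative_change(start)
    print(once == imperative_change(once))                         # False
    print(converge(converge(start, DESIRED), DESIRED) == DESIRED)  # True
```

Running the imperative script a second time raises max_clients again, while converging on the desired state a second time changes nothing. That repeatability is one reason a top-down description lends itself to automated management.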

And thus the past, in which we do things the way they have always been done, becomes an obstacle to a future where some things will need to be done differently. We are really not that different from people riding horse-drawn carriages in 1907 complaining about the noisy and dangerous horseless carriages.

Autonomic computing does not mean the end of understanding and editing configuration files. It means that this task will consume less of the sysadmin’s working day. I expect that autonomics, in some form, will evolve, regardless of kicking, screaming, and temper tantrums or editorializing against its adoption. And the people who develop autonomics may not be sysadmins but researchers willing to take a top-down, instead of a bottom-up, approach.

So times change: either the world becomes more complicated, or it appears more complicated because it now works differently. Remember, there are still many people living in developed countries who do not use electronic communication such as email, IM, and text messaging. Don’t get left behind.

The Lineup

I had often wondered about anycasting, so I contacted ISC and found Joe Abley willing and able to describe the pros, cons, and sheer aggravation surrounding the use of anycasting in IPv4. Anycasting is not a solution that many can use, but I believe you should be aware of it.

Alva Couch follows with his article that examines mindsets, or the semantic wall I also attempted to describe in this editorial. Learning more about the differences between imperative and functional programming languages is not the point of Alva’s article; the example is just used to demonstrate what he considers the crux of building real autonomic computing systems.

;LOGIN: FEBRUARY 2008 MUSINGS 3

Eric Langheinrich next tells us about a method for controlling access to Web content that relates to anti-spam techniques. Through the use of scripts and a scoring service, you can configure your Web server to deny access to crawlers that harvest content, such as email addresses, for later use in UCE (unsolicited commercial email).

We next have two articles related to papers presented at LISA ’07. A group from the University of Arizona describes Stork, a package management system designed for use in clusters and PlanetLab. Once you have installed your distributed applications, you can consider managing those applications using Plush, the second in this set of articles.

Octave Orgeron then continues his tutorial on Solaris LDoms, with a focus on advanced topics in network and disk configuration. He is followed by Aditya Sood, who explains problems with XML signing.

We have a new columnist starting with this issue of ;login:. Peter Galvin, longtime tutorial instructor at USENIX conferences as well as the Solaris columnist for the now-defunct Sys Admin, has agreed to write about Solaris for ;login:. I am happy to help provide a new home for Pete’s column and hope that many of you will continue to enjoy reading it.

And, as mentioned, we have summaries of LISA ’07, as well as of four of the workshops that occurred before the main conference began.

Starting with this issue, ;login: will include a cartoon courtesy of UserFriendly. We are thankful to David Barton for allowing us to lighten up our pages with some relevant humor.

Times are changing. But then times always change, and those changes often prove upsetting and difficult even to consider, much less accommodate. What sysadmins face today is an exploding number of computers, and computer-enabled devices, that must be managed. We need to look toward new technologies that will make managing these devices easier, even if the transition will be difficult. And I can’t imagine it will be easy.

REFERENCES

[1] LISA ’07 Technical Sessions: http://www.usenix.org/events/lisa07/tech/.

[2] Home page for IBM’s Autonomics project: http://www.research.ibm.com/autonomic/index.html.

[3] Wikipedia page, with more links about autonomics at the bottom: http://en.wikipedia.org/wiki/Autonomic_Computing.


;LOGIN: FEBRUARY 2008 FEAR AND LOATHING IN THE ROUTING SYSTEM 5

JOE ABLEY

fear and loathing in the routing system

Joe Abley is the Director of Operations at Afilias Canada, a DNS registry company, and a technical volunteer at Internet Systems Consortium. He likes his coffee short, strong, and black and is profoundly wary of outdoor temperatures that exceed 20°C.

ANYCAST IS A STRANGE ANIMAL. IN some circles the merest mention of the word can leave you drenched in bile; in others it’s an overused buzzword which triggers involuntary rolling of the eyes. It’s a technique, or perhaps a tool, or maybe a revolting subversion of all that is good in the world. It is “here to stay.” It is by turns “useful” and “harmful”; it “improves service stability,” “protects against denial-of-service attacks,” and “is fundamentally incompatible with any service that uses TCP.”

That a dry and, frankly, relatively trivial routing trick could engender this degree of emotional outpouring will be unsurprising to those who have worked in systems or network engineering roles for longer than about six minutes. The violently divergent opinions are an indication that context matters with anycast more than might be immediately apparent, and since anycast presents a very general solution to a large and varied set of potential problems, this is perhaps to be expected.

The trick to understanding anycast is to concentrate less on the “how” and far more on the “why” and “when.” But before we get to that, let’s start with a brief primer. Those who are feeling a need to roll their eyes already can go and wait outside. I’ll call you when this bit is done.

Nuts and Bolts

Think of a network service which is bound to a greater extent than you’d quite like to an IP address rather than a name. DNS and NTP servers are good examples, if you’re struggling to paint the mental image. Renumbering servers is an irritating process at the best of times, but if your clients almost always make reference to those servers using hard-coded IP addresses instead of names, the pain is far greater.

Before the average administrator has acquired even a small handful of battle scars from dealing with such services, it’s fairly common for the services to be detached from the physical servers that house them. If you can point NTP traffic for 204.152.184.72 at any server you feel like, moving the corresponding service around as individual servers come and go becomes trivially easy. The IP address in this case becomes an identifier, like a DNS name, detached from the address of the server that happens to be running the server processes on this particular afternoon.

With this separation between service address and server address, a smooth transition of this NTP service from server A to server B within the same network is possible with minimal downtime to clients. The steps are:

1. Make sure the service running on both servers is identical. In the case of an NTP service, that means that both machines are running appropriate NTP software and that their clocks are properly synchronized.

2. Add a route to send traffic with destination address 204.152.184.72 toward server B.

3. Remove the route that is sending traffic toward server A.
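The three steps can be modeled in a few lines of Python. This is a toy sketch under stated assumptions: the routing table is a plain dictionary mapping the service address to a set of next hops, the server names are hypothetical, and when two routes exist the toy router simply picks one deterministically. It shows that a client query gets an answer before, during, and after the move.

```python
# Toy model of moving service address 204.152.184.72 from server A to server B.
# Assumptions: the dict-based routing table and the server names are
# hypothetical; they do not correspond to any real router interface.

SERVICE = "204.152.184.72"


def query(routes: dict, dst: str) -> str:
    """Send one stateless query to dst and return the server that answers."""
    candidates = routes.get(dst, set())
    if not candidates:
        raise ConnectionError(f"no route to {dst}")
    # Toy tie-break when several routes exist; real routers apply their own rules.
    return min(candidates)


def move_service() -> list:
    routes = {SERVICE: {"server-A"}}
    answered_by = [query(routes, SERVICE)]      # before: only A is reachable
    routes[SERVICE].add("server-B")             # step 2: add a route toward B
    answered_by.append(query(routes, SERVICE))  # both routes present
    routes[SERVICE].discard("server-A")         # step 3: remove the route toward A
    answered_by.append(query(routes, SERVICE))  # after: only B is reachable
    return answered_by


if __name__ == "__main__":
    print(move_service())  # ['server-A', 'server-A', 'server-B']
```

Note that between steps 2 and 3 both routes exist at once, and the service keeps answering throughout.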

Ta-da! Transition complete. Clients didn’t notice. No need for a maintenance window. Knowing smiles and thoughtful nodding all round.

To understand how this has any relevance to the subject at hand, let’s insert another step into this process:

2.5. Become distracted by a particularly inflammatory Slashdot comment, spend the rest of the day grumbling about the lamentable state of the server budget for Q4, and leave the office at 11 p.m. as usual, forgetting all about step 3.

The curious thing here is that the end result might very well be the same: clients didn’t notice, and there is no real need for a maintenance window. What’s more, we can now remove either one of those static routes and turn off the corresponding server, and clients still won’t notice. We have distributed the NTP service across two origin servers using anycast. And we didn’t even break a sweat!

Why does this work? Well, a query packet sent to a destination address arrives at a server which is configured to accept and process that query, and the server answers. Each server is configured to reply, and the source address used each time is the service address. The fact that there is more than one server available doesn’t actually matter. To the client (and, in fact, to each server), it looks like there is only one server. The query-response behavior is exactly as it was without anycast, on both the client and the server. The only difference is that the routing system has more than one server to choose from when deciding where to send the request packet.
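Here is a minimal Python model of that exchange. It assumes two hypothetical origin servers, a routing system that picks one at random for each packet, and a client that accepts a reply only if its source is the address it queried. Because every server answers from the service address with the same data, the client cannot tell which server handled any given query, and does not need to.

```python
# Why a stateless, single-packet exchange tolerates anycast.
# Assumptions: hypothetical server names, a random per-packet routing choice,
# and a client that only accepts replies sourced from the address it queried.
import random
from dataclasses import dataclass

SERVICE = "204.152.184.72"


@dataclass
class Reply:
    src: str     # source address on the reply packet
    answer: str  # payload, identical on every origin server


class OriginServer:
    def __init__(self, name: str):
        self.name = name

    def handle(self, question: str) -> Reply:
        # Each server answers from the *service* address, not its own address.
        return Reply(src=SERVICE, answer=f"answer to {question}")


def client_query(servers: list, question: str, rng: random.Random) -> Reply:
    server = rng.choice(servers)  # the routing system's choice; invisible to the client
    reply = server.handle(question)
    if reply.src != SERVICE:      # a reply from an unexpected address would be dropped
        raise ValueError("reply from unexpected source")
    return reply


if __name__ == "__main__":
    rng = random.Random(1)
    servers = [OriginServer("server-A"), OriginServer("server-B")]
    answers = {client_query(servers, "time?", rng).answer for _ in range(100)}
    print(answers)  # {'answer to time?'}
```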

(To those in the audience who are getting a little agitated about my use of a stateless, single-packet exchange as an example here, there is no need to fret. I’ll be pointing out the flies in the ointment very soon.)

The ability to remove a dependency on a single server for a service is very attractive to most system administrators, since once the coupling between service and server has been loosened, intrusive server maintenance without notice (and within normal working hours) suddenly becomes a distinct possibility. Adding extra server capacity during times of high service traffic without downtime is a useful capability, as is the ability to add additional servers.

For these kinds of transitions to be automatic, the interaction between the routing system and the servers needs to be dynamic: that is, a server needs to be able to tell the routing system when it is ready to receive traffic destined for a particular service, and correspondingly it also needs to be able to tell the routing system when that traffic should stop. This signaling can be made to work directly between a server and a router using standard routing protocols, as described in ISC-TN-2004-1 [1] (also presented at USENIX ’04 [2]). This approach can also be combined with load balancers (sometimes called “layer-4 switches”) if the idea of servers participating in routing protocols directly is distasteful for local policy reasons.
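The signaling described above amounts to a small state machine on each server: check the service, announce the route when the service becomes healthy, and withdraw it when it stops being healthy. The Python sketch below assumes hypothetical check_service, announce, and withdraw callables. In a real deployment these would be a service health check and whatever actually injects or removes the route (for example, a routing daemon on the server as in ISC-TN-2004-1, or a load balancer). The sketch acts only on changes of state, so a steady state generates no routing churn.

```python
# Sketch: a server tells the routing system when to send it service traffic.
# check_service/announce/withdraw are hypothetical stand-ins for a real health
# check and a real route injection/removal mechanism.
from typing import Callable


class ServiceAdvertiser:
    def __init__(self, check_service: Callable[[], bool],
                 announce: Callable[[], None], withdraw: Callable[[], None]):
        self.check_service = check_service
        self.announce = announce
        self.withdraw = withdraw
        self.advertised = False

    def poll(self) -> bool:
        """Run one health check; announce or withdraw only when state changes."""
        healthy = self.check_service()
        if healthy and not self.advertised:
            self.announce()          # "ready to receive traffic"
            self.advertised = True
        elif not healthy and self.advertised:
            self.withdraw()          # "stop sending me traffic"
            self.advertised = False
        return self.advertised


if __name__ == "__main__":
    events = []
    health = iter([True, True, False, True])  # simulated health-check results
    adv = ServiceAdvertiser(lambda: next(health),
                            lambda: events.append("announce"),
                            lambda: events.append("withdraw"))
    for _ in range(4):
        adv.poll()
    print(events)  # ['announce', 'withdraw', 'announce']
```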

This technique can be used to build a cluster of servers in a single location to provide a particular service, or to distribute a service across servers spread widely throughout your network, or both. With a little extra attention paid to addressing, it can also be used to distribute a single service around the Internet, as described in ISC-TN-2003-1 [3].

Anycast Marketing

Some of the benefits to the system administrator of distributing a service using anycast have already been mentioned. However, making the lives of system administrators easier rarely tops anybody’s quarterly objectives, much as you might wish otherwise. If anycast doesn’t make the service better in some way, there’s little opportunity to balance the cost of doing it.

So what are the tangible synergies? What benefits can we whiteboard proactively, moving forward? Where are the bullet points? Do you like my tie? It’s new!

Distributing a service around a network has the potential to improve service availability, since the redundancy inherent in using multiple origin servers affords some protection from server failure. For a service that has bad failure characteristics (e.g., a service that many other systems depend on) this might be justification enough to get things moving.

Moving the origin server closer to the community of clients that use it has the potential to improve response times and to keep traffic off expensive wide-area links. There might also be opportunities to keep a service running in a part of your network that is afflicted by failures in wide-area links in a way that wouldn’t otherwise be possible.

For services deployed over the Internet, as well as nobody knowing whether you’re a dog, there’s the additional annoyance and cost of receiving all kinds of junk traffic that you didn’t ask for. Depending on how big a target you have painted on your forehead, the unwanted packets might be a constant drone of backscatter, or they might be a searing beam of laser-like pain that makes you cry like a baby. Either way, it’s traffic that you’d ideally like to sink as close to the source as possible, preferably over paths that are as cheap as possible. Anycast might well be your friend.

Flies in the Ointment

The architectural problem with anycast for use as a general-purpose service distribution mechanism results from the flagrant abuse of packet delivery semantics and addressing that the technique involves. It’s a hack, and as with any hack, it’s important to understand where the boundaries of normal operation are being stretched.

Most protocol exchanges between clients and servers on the Internet involve more than one packet being sent in each direction, and most also involve state being retained between subsequent packets on the server side. Take a normal TCP session establishment handshake, for example:

1. Client sends a SYN to a server.

2. Server receives the SYN and replies with a SYN-ACK.

3. Client receives the SYN-ACK and replies with an ACK.

4. Server receives the ACK, and the TCP session state on both client and server is “ESTABLISHED.”


This exchange relies on the fact that “server” is the same host throughout the exchange. If that assumption turns out to be wrong, then this happens:

1. Client sends a SYN to server A.

2. Server A receives the SYN and replies with a SYN-ACK.

3. Client receives the SYN-ACK and replies to the service address with an ACK.

4. Server B receives the ACK and discards it, because it has no corresponding session in “SYN-RECEIVED.”

At the end of this exchange, the client believes its session is “ESTABLISHED” (it moved there when it acknowledged the SYN-ACK), server A is stuck in “SYN-RECEIVED,” and server B has no session state at all. Clearly this does not satisfy the original goal of making things more robust; in fact, under even modest query load from perfectly legitimate clients, the view from the servers is remarkably similar to that of an incoming SYN flood.
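The server-side view of this failure can be modeled in Python. The model deliberately ignores sequence numbers, retransmissions, and timeouts (all simplifying assumptions) and tracks only whether each server holds a half-open entry for a flow. The client flow tuple uses a documentation address and is hypothetical.

```python
# Toy, server-side model of a TCP handshake whose packets reach different
# anycast servers. Simplifications (assumptions): no sequence numbers,
# retransmissions, or timeouts; each server only records per-flow state.


class ToyServer:
    def __init__(self, name: str):
        self.name = name
        self.state = {}  # flow tuple -> "SYN-RECEIVED" or "ESTABLISHED"

    def receive(self, flow: tuple, segment: str) -> str:
        if segment == "SYN":
            self.state[flow] = "SYN-RECEIVED"  # half-open; a SYN-ACK goes back
            return "SYN-ACK"
        if segment == "ACK" and self.state.get(flow) == "SYN-RECEIVED":
            self.state[flow] = "ESTABLISHED"
            return "accepted"
        # This server never saw the SYN, so it cannot complete the handshake.
        return "rejected"


def handshake(syn_to: ToyServer, ack_to: ToyServer, flow: tuple) -> str:
    """Deliver the client's SYN to one server and its ACK to another (or the same)."""
    syn_to.receive(flow, "SYN")
    return ack_to.receive(flow, "ACK")


if __name__ == "__main__":
    flow = ("198.51.100.7", 40000, "204.152.184.72", 80)  # hypothetical client flow

    a = ToyServer("A")
    print(handshake(a, a, flow), a.state[flow])  # accepted ESTABLISHED

    a, b = ToyServer("A"), ToyServer("B")
    print(handshake(a, b, flow), a.state[flow], b.state)  # rejected SYN-RECEIVED {}
```

Repeat the split case for many clients and server A accumulates half-open entries it will never complete, which is why the load looks like a SYN flood.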

It’s reasonable to wonder what would cause packets to be split between servers in this way, because if that behavior can be prevented, perhaps the original benefits of distributed services that gave us all those warm fuzzies can be realized without inadvertently causing our own clients to attack us. The answer lies in the slightly mysterious realm of routing.

The IP routing tables most familiar to system administrators are likely to be relatively brief and happily uncontaminated with complication. A single default route might well suffice for many hosts, for example; the minimal size of that routing table is a reflection of the trivial network topology in which the server is directly involved. If there’s only one option for where to send a packet, that’s the option you take. Easy.

Routers, however, are frequently deployed in much more complicated networks, and the decision about where to send any particular packet is correspondingly more involved. In particular, a router might find itself in a part of the network where there is more than one viable next hop toward which to send a packet; even with additional attributes attached to individual routes, allowing routers to prioritize one routing table entry over another, there remains the distinct possibility that a destination address might be reached equally well by following any one of several candidate routes. This situation calls for Equal-Cost Multi-Path (ECMP) routing.

Without anycast in the picture, so long as the packets ultimately arrive at the same destination, ECMP is probably no cause for lost sleep. If the destination address is anycast, however, there’s the possibility that different candidate routes will lead to different servers, and therein lies the rub.
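To see how ECMP can split a single conversation, consider one possible ECMP policy: per-packet round-robin over the equal-cost next hops. This Python sketch assumes that policy and two hypothetical next hops, each leading to a different anycast server.

```python
# Per-packet ECMP toward an anycast address. Assumptions: the router uses
# round-robin per packet (one possible ECMP policy), and the next hops and the
# servers behind them are hypothetical.
from itertools import cycle

# Two equal-cost routes to the same service address, ending at different servers.
EQUAL_COST_ROUTES = {"next-hop-1": "server-A", "next-hop-2": "server-B"}


def deliver_flow(packets: list) -> list:
    """Return the server that receives each packet of one client-server flow."""
    next_hop = cycle(EQUAL_COST_ROUTES)  # take the next hops in turn, forever
    return [EQUAL_COST_ROUTES[next(next_hop)] for _ in packets]


if __name__ == "__main__":
    print(deliver_flow(["SYN", "ACK", "DATA", "DATA"]))
    # ['server-A', 'server-B', 'server-A', 'server-B']
```

With this policy the SYN and the ACK of one handshake reach different servers, which is exactly the failure shown in the broken handshake earlier.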

Horses for Courses

So, is anycast a suitable approach to making services more reliable? Well, yes and no. Maybe. Maybe not, too. Oh, it’s all so vague! I crave certainty! And caffeine-rich beverages!

The core difficulty that leads to all this weak hand-waving is that it’s very difficult to offer a general answer when the topology of even your own network depends on the perspective from which it is viewed. When you start considering internetworks such as, well, the Internet, the problem of formulating a useful general answer stops being simply hard and instead becomes intractable.

From an architectural perspective, the general answer is that for general-purpose services and protocols, anycast doesn’t work. Although this is mathematically correct (in the sense that the general case must apply to all possible scenarios), it flies in the face of practical observations and hence doesn’t really get us anywhere. Anycast is used today in applications ranging from the single-packet exchanges of the DNS protocol to multi-hour streaming audio and video. So it does work, even though in the general case it can’t possibly.

The fast path to sanity is to forget about neat, simple answers to general questions and concentrate instead on specifics. Just because anycast cannot claim to be generally applicable doesn’t mean it doesn’t have valid applications.

First, consider the low-hanging fruit. A service that involves a single-packet, stateless transaction is most likely ideally suited to distribution using anycast. Any amount of oscillation in the routing system between origin servers is irrelevant, because the protocol simply doesn’t care which server processes each request, so long as it can get an answer.

The most straightforward example of a service that fits these criteria is DNS service using UDP transport. Since the overwhelming majority of DNS traffic on the Internet is carried over UDP, it’s perhaps unsurprising to see anycast widely used by so many DNS server administrators.

As we move on to consider more complicated protocols (in particular, protocols that require state to be kept between successive packets), let’s make our lives easy and restrict our imaginings to very simple networks whose behavior is well understood. If our goal is to ensure that successive packets within the same client-server exchange are carried between the same client and the same origin server for the duration of the transaction, there are some tools we can employ.

We can arrange for our network topology to be simple, such that multiple candidate paths to the same destination don’t exist. The extent to which this is possible might well depend on more services than just yours, but then the topology also depends to a large extent on the angle you view it from. It’s time to spend a while under the table, squinting at the wiring closet. (But perhaps wait until everybody else has gone home, first.)

We can choose ECMP algorithms on routers that have behavior consistent with what we’re looking for. Cisco routers, for example, with CEF (Cisco Express Forwarding) turned on, will hash pertinent details of a packet’s header and divide the hash space among the candidate routes available. With two candidate routes, if the computed hash is in the first half of the space, you choose the left-hand route; if it is in the other half, you choose the right-hand route. So long as the hash is computed over enough header variables (e.g., source address and port, destination address and port), the route chosen ought to be consistent for any particular conversation (“flow,” in router-ese). Other vendors’ routers have similar capabilities.
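A per-flow variant of the same idea, in Python. This illustrates hash-based next-hop selection in general; it is not Cisco’s CEF algorithm or any other vendor’s. SHA-256 stands in for whatever fast hash a router uses, the hash covers the four header fields named above, and the route names are hypothetical. The hash space is cut into equal slices, one per candidate route, so every packet of a given flow lands on the same route.

```python
# Hash-based, per-flow ECMP next-hop selection. Illustration only: not any
# vendor's algorithm; SHA-256 stands in for a router's hash, and the route
# names are hypothetical.
import hashlib

NEXT_HOPS = ["left-hand route", "right-hand route"]


def pick_next_hop(src: str, sport: int, dst: str, dport: int) -> str:
    """Map a flow (source/destination address and port) to one candidate route."""
    key = f"{src}|{sport}|{dst}|{dport}".encode()
    h = int.from_bytes(hashlib.sha256(key).digest()[:4], "big")  # 32-bit value
    slice_size = 2**32 // len(NEXT_HOPS)  # one equal slice of hash space per route
    return NEXT_HOPS[min(h // slice_size, len(NEXT_HOPS) - 1)]


if __name__ == "__main__":
    flow = ("198.51.100.7", 40000, "204.152.184.72", 53)
    print(len({pick_next_hop(*flow) for _ in range(1000)}))  # 1: one route per flow
    spread = {pick_next_hop("198.51.100.7", p, "204.152.184.72", 53)
              for p in range(40000, 40100)}
    print(sorted(spread))  # different flows may take different routes
```

The min() guard only matters when the number of routes does not divide 2**32 evenly. With two routes the slices are exact halves, matching the first-half/other-half description in the text.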

When it comes to deploying services using anycast across other people’s networks (e.g., between far-flung corners of the Internet), there is little certainty in architecture, topology, or network design, and we need instead to think in terms of probability: we need to assess benefit in the context of risk.

Internet, n: “the largest equivalence class in the reflexive transitive symmetric closure of the relationship ‘can be reached by an IP packet from’” (Seth Breidbart).

The world contains many hosts that consider themselves connected to the Internet. However, that “Internet” is, in general, different for every host: It’s a simple truism that not all the nodes in the world that believe themselves to be part of “the” Internet can exchange packets with each other, even before we consider the impact of packet filters and network address translation. The Internet is a giant, seething ball of misconfigured packet filters, routing loops, and black holes, and it’s important to acknowledge this so that the risks of service deployment using anycast can be put into appropriate context.

A service that involves stateful, multi-packet exchanges between clients and servers on the Internet, deployed in a single location without anycast, will be unavailable for a certain proportion of hosts at any time. You can sometimes see signs of this in Web server and mail logs in the case of asymmetric failures (e.g., sessions that are initiated but never get established); other failure modes might relate to control failures (e.g., the unwise blanket denial of ICMP packets in firewalls, which so often breaks Path MTU Discovery). In other cases the unavailability might have less mysterious origins, such as a failed circuit to a transit provider, which leaves an ISP’s clients able to reach resources only via peer networks.

Distributing the same service using anycast can eliminate or mitigate some of these problems, while introducing others. Access to a local anycast node via a peer might allow service to be maintained to an ISP with a transit failure, for example, but might also make the service vulnerable to rapid changes in the global routing system, which can cause packets from a single client to switch nodes, with corresponding loss of server-side state. At layer 9, anycast deployment of a service might increase costs in server management, data center rental, shipping, and service monitoring, but it might also dramatically reduce Internet access charges by shifting the content closer to the consumer. As with most real-life decisions, everything is a little grey, and one size does not fit all.

Go West, Young Man

So, suppose you’re the administrator of a service on the Internet. Your technical staff have decided that anycast could make their lives easier, or perhaps the pointy-haired guy on the ninth floor heard on the golf course that anycast is new and good and wants to know when it will be rolled out so he can enjoy his own puffery the next time he’s struggling to maintain par on the eighth hole. What to do?

First, there is guidance produced in the IETF by a group of contributors who have real experience in running anycast services. That the text of RFC 4786 [4] made it through the slings and arrows of outrageous run-on threads and appeals in the IETF process ought to count for something, in my opinion (although as a co-author I am certainly biased).

Second, run a trial. No amount of theorizing can compete with real-world experience. If you want to know whether a Web server hosting images can be safely distributed around a particular network, try it out and see what happens. Find some poor victim of the Slashdot effect, offer to host her page on your server, and watch your logs. Grep the output of netstat -an and look for stalled TCP sessions that might indicate a problem.
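A small Python sketch of that last step, assuming the connection state is the final column of each TCP line in netstat -an output (true of the usual Linux and BSD formats, though layouts vary by platform). It counts sockets stuck in handshake states; a steadily growing number of half-open sessions is one possible hint that some clients’ packets are reaching a different node than the one that answered them.

```python
from collections import Counter

# Handshake states that suggest a connection never completed. Linux prints
# SYN_RECV and the BSDs print SYN_RCVD, so both are included.
SUSPECT_STATES = {"SYN_RECV", "SYN_RCVD", "SYN_SENT"}


def suspect_sessions(netstat_output: str) -> Counter:
    """Count TCP sockets in half-open states in `netstat -an` text.

    Assumes the state is the last whitespace-separated field of each TCP
    line; header lines and UDP lines are skipped.
    """
    counts = Counter()
    for line in netstat_output.splitlines():
        fields = line.split()
        if (len(fields) >= 6 and fields[0].startswith("tcp")
                and fields[-1] in SUSPECT_STATES):
            counts[fields[-1]] += 1
    return counts
```

On a live node, the input could come from `subprocess.run(["netstat", "-an"], capture_output=True, text=True).stdout`, sampled periodically to watch the trend rather than a single snapshot.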

Third, think about what problems anycast could introduce, and consider ways to minimize the impact on the service or to provide a fallback that allows the problems to be worked around. If your service involves HTTP, consider using a redirect on the anycast-distributed server that directs clients to a non-anycast URL at a specific node. Similar options exist with some streaming media servers. If you can make the transaction between clients and the anycast service as brief as possible, you might insulate against periodic routing instability, which is more likely to interrupt longer sessions.
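One way to sketch the redirect idea with nothing but Python’s standard http.server module: the server listening on the anycast address answers every GET with a 302 pointing at a unicast hostname for the node that received the request, so the long transfer runs against an address that a routing change cannot move to another node. NODE_UNICAST_HOST and its value are hypothetical; each node would be configured with its own name.

```python
from http.server import BaseHTTPRequestHandler

# Hypothetical unicast (non-anycast) name of this particular node. Each
# anycast node would carry its own value.
NODE_UNICAST_HOST = "node1.images.example.com"


def redirect_target(path: str, node_host: str = NODE_UNICAST_HOST) -> str:
    """Build the URL on this node's unicast address for the requested path."""
    if not path.startswith("/"):
        path = "/" + path
    return f"http://{node_host}{path}"


class AnycastRedirectHandler(BaseHTTPRequestHandler):
    """Answer every GET on the anycast address with a short 302 redirect,
    keeping the anycast part of the exchange to a single brief response."""

    def do_GET(self):
        self.send_response(302)
        self.send_header("Location", redirect_target(self.path))
        self.send_header("Content-Length", "0")
        self.end_headers()
```

To experiment, `HTTPServer(("", 8080), AnycastRedirectHandler).serve_forever()` (with `HTTPServer` imported from the same module) serves it on port 8080; a real deployment would bind the anycast service address on port 80.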

Fourth, consider that there are some combinations of service, protocol, and network topology in which anycast will never work well. Anycast is no magic wand; to paraphrase the WOPR [5], sometimes the only way to win is not to play.

REFERENCES

[1] J. Abley, “A Software Approach to Distributing Request for DNS Service Using GNU Zebra, ISC BIND9 and FreeBSD,” ISC-TN-2004-1, March 2004: http://www.isc.org/pubs/tn/isc-tn-2004-1.html.

[2] USENIX Annual Technical Conference (USENIX ’04) report, ;login:, October 2004, page 52.

[3] J. Abley, “Hierarchical Anycast for Global Service Distribution,” ISC-TN-2003-1, March 2003: http://www.isc.org/pubs/tn/isc-tn-2003-1.html.

[4] J. Abley and K. Lindqvist, “Operation of Anycast Services,” RFC 4786, December 2006.

[5] “War Operation Plan Response”: http://en.wikipedia.org/wiki/WarGames.


ALVA COUCH

From x=1 to (setf x 1): What Does Configuration Management Mean?

A SYSTEM ADMINISTRATION RESEARCHER CONSIDERS LESSONS LEARNED FROM LISA ’07, INCLUDING THE RELATIONSHIP BETWEEN CONFIGURATION MANAGEMENT AND AUTONOMIC COMPUTING

Alva Couch is an Associate Professor of Computer Science at Tufts University, where he and his students study the theory and practice of network and system administration. He served as Program Chair of LISA ’02 and was a recipient of the 2003 SAGE Outstanding Achievement Award for contributions to the theory of system administration. He currently serves as Secretary of the USENIX Board of Directors.

[email protected]ts.edu

The configuration management workshop at LISA this year brushed against autonomic configuration management, but as usual “there were no takers.” The lessons of autonomic control in network management (also called “self-managing systems”) seemed far removed from practice, “something to think about 10 years from now.” Meanwhile, many talks throughout the conference (including the keynote, a guru session, and several technical papers) discussed automatic management mechanisms, although some speakers stopped short of calling these “self-managing” or “autonomic.” Autonomics were almost a theme. But, in my opinion, these speakers made few converts. I stopped to think about why, and I think I have a simple explanation: It’s all about meaning.

The meaning crisis that system administrators face is very similar to the crisis of meaning that plagued the programming languages community in the past: There is a difference in semantics between doing things autonomically and doing things via traditional configuration management. “Semantics” refers to “what things mean.” The difference is so small, and yet so profound, that the community is not fully aware of it. But it places so crippling a wall between autonomics and traditional configuration management that it is worthy of comment in itself.

Operational and Axiomatic Semantics

In programming languages, there is a “semantic wall” between statically typed languages such as C and dynamically typed languages such as LISP. The difference between these languages seems small but is actually profound. The meaning of a C program is easily defined in terms of the operations of the base machine. This is called operational semantics. By contrast, the interactions between a LISP interpreter and the base machine are not useful to understand. Instead, one expresses the meaning of statements via axiomatic semantics: a mathematical description of the observable behavior resulting from executing statements, without reference to the underlying way in which statements are actually implemented.


To understand this subtlety, consider the difference between the semantics of the C statement x=1 and the LISP statement (setf x 1). For x=1 there is an empowering operational (also called “bottom-up”) semantic model: “there is a cell named x into which the value 0x00000001 is written.” The operational semantics of (setf x 1), however, are not particularly empowering. There is a symbol named x that is created in a symbol table (indexed by name), and there is a numeric atom containing the value 1, and those are associated via the property “symbol-value” of the symbol x. At a deeper level, index trees become involved. But those facts about the LISP version of x are not important and not empowering except to people implementing LISP. The axiomatic (“top-down”) equivalent for the meaning of this statement is that “after (setf x 1), the symbol x refers to the atom 1.” The details of implementation are stripped, and only the valuable functional behavior is left.
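A toy Python contrast may help; it is not a model of any real LISP implementation. The operational reading of x=1 is a statement about bytes in a cell, while the axiomatic reading of (setf x 1) is a property that any implementation must satisfy. DictSymbols, AlistSymbols, and satisfies_setf_axiom are hypothetical names; the two classes store bindings in entirely different ways, yet both pass the same check.

```python
import struct

# Operational reading of C's x=1: a 4-byte cell holding 0x00000001.
# Little-endian layout is assumed; byte order is a fact about the machine.
cell = bytearray(4)
struct.pack_into("<i", cell, 0, 1)


class DictSymbols:
    """One hypothetical implementation: a hash table indexed by name."""

    def __init__(self):
        self._table = {}

    def setf(self, name, value):
        self._table[name] = value

    def symbol_value(self, name):
        return self._table[name]


class AlistSymbols:
    """Another hypothetical implementation: an association list, newest first."""

    def __init__(self):
        self._alist = []

    def setf(self, name, value):
        self._alist.insert(0, (name, value))

    def symbol_value(self, name):
        for key, value in self._alist:
            if key == name:
                return value
        raise KeyError(name)


def satisfies_setf_axiom(symbols) -> bool:
    """Axiomatic reading of (setf x 1): afterward, x refers to 1.
    Nothing here depends on how `symbols` stores the binding."""
    symbols.setf("x", 0)
    symbols.setf("x", 1)
    return symbols.symbol_value("x") == 1
```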

The Semantic Wall of Configuration Management

We now face a similar semantic wall between systems that exhibit “autonomic” behaviors and systems that “automate” configuration management. The latter use operational semantics (like x=1), whereas the former use axiomatic semantics (like (setf x 1)). This difference may seem unimportant, but it is central enough to cripple the discipline.

Current configuration management tools such as BCFG2, Puppet, and Cfengine use an operational semantic model similar to that of x=1 in C. The “meaning” of each tool’s input is “what it does to the configurations of machines.” Regardless of how data is specified, its final destination in a specific configuration file or files is what it “means.” For example, regardless of the way in which one specifies an Internet service, one knows that it must end up as an entry in /etc/xinetd.conf or a file in /etc/xinetd.d; its “meaning” is defined in terms of that final position within the configuration of the machine.

By contrast, autonomic systems are configured via axiomatic semantics; the parameters specified have no direct relationship to the actual contents of files on a machine, nor is understanding that correspondence important or empowering, because the relationship between a parameter and its realization (in terms of the behavior that it engenders or encourages) is too complex to be useful. For example, a specification that “the Web server must have a response time of less than 2 seconds for each request” has little to do with the actual identity of the Web server or how that result might be achieved. In a very deep sense, that information is not useful in understanding the objective.
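To make the contrast concrete, here is a Python sketch of checking such a behavioral objective directly rather than inspecting any file. meets_response_objective and its send_request parameter are hypothetical names: the caller supplies whatever function issues one request (for example, one wrapping urllib.request.urlopen), and the check never asks which server answered or how it was configured. Like any test, it samples behavior; it does not guarantee it.

```python
import time
from typing import Callable, Iterable


def meets_response_objective(send_request: Callable[[str], object],
                             paths: Iterable[str],
                             limit_seconds: float = 2.0) -> bool:
    """Check the objective "each request is answered in under limit_seconds".

    send_request issues one request for a path. The check measures only
    observable behavior (elapsed time), never configuration files.
    """
    for path in paths:
        start = time.monotonic()
        send_request(path)
        if time.monotonic() - start >= limit_seconds:
            return False
    return True
```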

To use autonomics effectively, we need to progress from a semantic model in which x=1 is defined operationally to a semantic model in which (setf x 1) is defined axiomatically. This was a big step in programming languages and is an equally daunting step in configuration management. But, as I will explain, not only do current tools not contribute to that progress, they actually work against it, by reinforcing practices that needlessly entrench us in operational semantics and distance us from the potential for axiomatic meaning.

Abstraction and Meaning

Current approaches to configuration management, such as Cfengine, BCFG2, and Puppet, attempt to close the gap via what some authors call “raising the level of abstraction” at which one specifies configuration. However, simply raising the level of abstraction cannot scale the semantic wall between operation and behavior. One hard lesson of programming language semantics is that it is not enough to “abstract” upward from the machine; one must also create a model of behavior (in an axiomatic sense) with which one can reason at a high level, and with simpler semantic properties than the full operational model. Simply raising the level of abstraction does not automatically create any model other than the existing operational model of “bits on disk.” Without an empowering semantic model, it is no easier to reason about a high-level description based upon operational semantics than it is to reason about a low-level description of the same thing.

Authors of configuration management tools frequently wonder why the level of adoption of their tools is so low. The answer, I think, lies in this issue of semantics. The tools do not “make things easier to understand”; they make things that remain difficult to understand easier to construct. No matter how skillfully one learns to use the tool, one is committed to an operational semantic model, in which one must still understand what “bits on disk” mean in order to understand what a tool does. The tool thus represents “something extra to learn” rather than “something that one can afford to forget.”

There is no doubt that current tools save much work and raise the maturity level of a site but, alas, they fail to make the result easier to understand. It is thus not surprising that less experienced administrators with much left to learn about “bits on disk” shy away from having to learn even more than before. If configuration management represents “something else to learn” rather than “something easier to master,” it is no surprise that use of configuration management tools finishes dead last in priority among inexperienced system administrators. If tools are to become attractive, they must represent “less to learn” rather than “more to learn.”

Modeling Behavior

A successful model of configuration semantics would allow one to avoid irrelevant detail and concentrate on important details. The gulf between “automated” and “autonomic” configuration management is so great, however (like the gulf between C and the logic-programming language Prolog), that some intermediate semantics (e.g., those of LISP) might help. If, as well, this intermediate semantics is straightforward enough to be empowering, then we have a semantic layer we can use to bridge between “automated” and “autonomic” models. The current semantics has the character of C’s x=1, whereas this intermediate semantics might have the character of LISP’s (setf x 1).

Consider, for example, the intermediate semantics of file service. The “bottom-up” semantic model of this is that what one writes into configuration files leads to some fixed binding between each client (embodied as a machine) and some file server. The “top-down” model of file service can be expressed much more simply. There is some “service” (which can potentially move from server to server) and some “pool of clients” (which must share the same service), but there is no binding between that service and a particular server, because such a binding would be irrelevant to specifying the goal of providing that service. Instead, there is an expectation of service behavior that is missing from the bottom-up semantic model. That behavioral objective is that whatever file a user writes to the service persists across any kind of network event or contingency, and can be recovered later by reading it back from the service via the same pathname. The way that this behavioral objective is met is not central to reasoning about the requirement. The objective is not a property of a specific machine, but of the management process itself; if the server changes, the file still (hopefully) persists, as much by the actions of human administrators (in recovering it from backups) as by the actions of software.

By contrast, the bottom-up model of file service is limiting in simple but profound ways. Saying “server X should provide Network File System (NFS) service to client Y” (or even “some server in a pool P should provide NFS to client Y”) is similar to saying x=1. There are implicit limiting assumptions about how this might be done. In particular, we have implicitly decided in the former statement that NFS is the objective rather than a means toward an objective. A file service is semantically very much like (setf x 1), in which, by some unspecified method, two operations to which we refer as “write” (analogous to executing (setf x 1) in LISP) and “read” (analogous to referencing x after the setf) have consistent behaviors. This model is simple, but NFS is relatively complex, and there are many ways of assuring this kind of behavior other than by using NFS.

An axiomatic model of file service thus differs drastically from the operational model. The entities are not machines, but users, and the axiomatic formulation is that, for each user, writing content to a path results in that content being available henceforth via that path. For simplicity, we might notate this “behavioral axiom” as:

User –(Path:Content)-> filesystem

to mean that for an entity that is a “User,” interactions with the entity “filesystem” comprise associating a “Path” with “Content” and being able to retrieve that content via that path. “User,” “Path,” and “Content” are types that refer to sets of potential entities, whereas “filesystem” is an entity. The arrow represents a dominance relationship, in which any entity of type “User” is dominant in creating content; “filesystem” is subservient in recording and preserving that relationship.
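The behavioral axiom can be written as an executable check. In this Python sketch (all names hypothetical), check_file_axiom tests only what the axiom promises: after each user writes content to a path, reading that path as that user returns the most recent content. Two unrelated backends, an in-memory dictionary and a per-user directory tree on local disk, both satisfy it, which is the point: the axiom says nothing about NFS or any other mechanism.

```python
import os
import tempfile


class MemoryStore:
    """Hypothetical backend: content held in a dictionary."""

    def __init__(self):
        self._data = {}

    def write(self, user, path, content):
        self._data[(user, path)] = content

    def read(self, user, path):
        return self._data[(user, path)]


class DiskStore:
    """Hypothetical backend: one directory per user under a root directory.
    Paths are not sanitized; this is an illustration only."""

    def __init__(self, root):
        self._root = root

    def _file(self, user, path):
        return os.path.join(self._root, user, path.lstrip("/"))

    def write(self, user, path, content):
        target = self._file(user, path)
        os.makedirs(os.path.dirname(target), exist_ok=True)
        with open(target, "w", encoding="utf-8") as f:
            f.write(content)

    def read(self, user, path):
        with open(self._file(user, path), encoding="utf-8") as f:
            return f.read()


def check_file_axiom(store, writes):
    """User -(Path:Content)-> filesystem: after the (user, path, content)
    writes, each (user, path) reads back the last content written to it."""
    for user, path, content in writes:
        store.write(user, path, content)
    latest = {(u, p): c for u, p, c in writes}  # later writes win
    return all(store.read(u, p) == c for (u, p), c in latest.items())


if __name__ == "__main__":
    sample = [("alice", "/notes/todo.txt", "buy milk"),
              ("bob", "/notes/todo.txt", "fix NFS"),
              ("alice", "/notes/todo.txt", "buy bread")]
    with tempfile.TemporaryDirectory() as root:
        print(check_file_axiom(MemoryStore(), sample),
              check_file_axiom(DiskStore(root), sample))
```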

Modeling Services

One advantage of such a model is that many details that are purely matters of implementation drop out of the model. The most important facet of a DHCP relationship between server and client is that the server specifies the address of the client:

DHCP –(MAC:IPaddress)-> Client

and that the client is then accessible through that address. There are many ways of assuring the latter, but one of the more common is “dynamic DNS,” in which:

DHCP –(Name:IPaddress,IPaddress:Name)-> DNS

This means that DHCP specifies the name-to-IP mapping (and its reverse) to DNS in accordance with its data on active clients. DNS in turn returns these mappings to clients:

DNS –(Name:IPaddress,IPaddress:Name)-> Client

This means that a client asking for an “IPaddress” for a “Name,” or a “Name” for an “IPaddress,” gets the one that DHCP specified originally. The “MAC” to “Name” mapping is specified by an administrator:

Administrator –(MAC:Name)-> DHCP

These are all dominance relationships very much like the one that describes file service.

This level of description is independent of irrelevant detail, such as how the mapping is accomplished. Caching, timeouts, and formats of mappings are (at this level) irrelevant details. The important details include dominance relationships and behavioral predictions, including that the address assigned by DHCP is indeed the address by which the host can be located via DNS.
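The DHCP and dynamic DNS relationships can be sketched as a small Python model together with a check of that behavioral prediction: the address DHCP assigns is the address at which DNS locates the host, forward and reverse. Every class and method name here is hypothetical, and leasing is reduced to handing out the next free address in one subnet.

```python
import ipaddress


class DNS:
    """Hypothetical name service holding forward and reverse mappings."""

    def __init__(self):
        self.forward = {}  # Name -> IPaddress
        self.reverse = {}  # IPaddress -> Name

    def update(self, name, address):
        # DHCP -(Name:IPaddress,IPaddress:Name)-> DNS
        self.forward[name] = address
        self.reverse[address] = name


class DHCP:
    """Hypothetical DHCP service leasing addresses from one subnet in order."""

    def __init__(self, network, dns):
        self._free = [str(a) for a in ipaddress.ip_network(network).hosts()]
        self._dns = dns
        self.names = {}   # MAC -> Name, supplied by the administrator
        self.leases = {}  # MAC -> IPaddress, handed to clients

    def register(self, mac, name):
        # Administrator -(MAC:Name)-> DHCP
        self.names[mac] = name
        if mac in self.leases:
            self._dns.update(name, self.leases[mac])

    def lease(self, mac):
        # DHCP -(MAC:IPaddress)-> Client, plus a dynamic DNS update if named
        if mac not in self.leases:
            self.leases[mac] = self._free.pop(0)
            if mac in self.names:
                self._dns.update(self.names[mac], self.leases[mac])
        return self.leases[mac]


def invariant_holds(dhcp, dns):
    """Behavioral prediction: each named, leased host is found in DNS, forward
    and reverse, at exactly the address DHCP assigned to it."""
    return all(
        dns.forward.get(dhcp.names[mac]) == addr
        and dns.reverse.get(addr) == dhcp.names[mac]
        for mac, addr in dhcp.leases.items()
        if mac in dhcp.names
    )
```

Register a MAC as “printer,” lease it an address, and invariant_holds returns True; change the forward mapping in DNS behind DHCP’s back and it returns False. The check is stated entirely in terms of the relationships, not of any configuration file.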

The beauty of this scheme is that we describe “how things should work” but not “how this behavior is assured.” The former is empowering; the latter is more or less irrelevant if our tools understand the former. But current tools do not understand the former; neither can they assure this behavior without a lot of help from human beings.

Promises, Promises . . .

The astute reader will realize that this notation is very similar to that of promise theory, and the even more astute reader will realize that promise theory does not include a globally valid semantic model. Promises are a concept introduced by Mark Burgess to provide a simple framework for modeling interactions between agents during configuration management. A promise is a declaration of behavioral intent, whose semantic interpretation is up to the individual agent receiving each promise. A promise between agents assumes as little as possible about behavior, while at the same time being as clear as possible about the intention of the promise. The “type” of a promise is a starting point for the agent’s determination of the promise’s “meaning,” which is an emergent property of the promise, tempered by the receiver’s local observation of its validity or lack thereof.

My notation, by contrast, globally defines expected interactions and their results. Promises enable local interactions, whereas the notation here attempts to describe overarching intent. Thus my semantics may look as though it describes promises but, because it describes intent as a global invariant, it is not like promises at all. Promise theory is one level up from my model in complexity, in that it does not assume that agents can be trusted to cooperate.

Coming to Closure . . .

In like manner, anything that implements the semantics of my notation is a closure, in the sense that it exhibits semantic predictability based upon an exterior description of behavior. This is how “closure” is defined.

It is well documented that building a closure is difficult and requires changes in the way we think about and notate a problem, but so was building a LISP interpreter in C, and we managed to do that. Most of the difficulty inherent in both tasks (building a closure or building a LISP interpreter) lies in letting go of lower-level details and scaling the semantic wall without looking back or down.

This is what we currently cannot bring ourselves to do.

And, because this is exactly what autonomic tools do, we are setting ourselves up for a rude awakening in which our tools and practices lag far behind the state of the art.

Science, Engineering, or Sociology?

We, our tools, and our practices are faced with a semantic wall. On one side of the wall lie operational semantics. On the other side lie axiomatic semantics. We have two choices: Scale that wall ourselves or let someone else scale it for us. If we sit still and let others do the climbing, that climbing will be done by systems engineers who understand little of the human part of system administration. If we instead take an active role, higher-level semantics can evolve in accordance with our human needs as system administrators, in addition to the needs of our organizations.

And the way I think we can take an active role may be somewhat surprising. One can take a role in this revolution even if one uses no tools and does everything by hand!

The Power of Commonality

It is easy to forget that the widely accepted Common LISP standard was preceded by a plethora of relatively uncommon LISPs. There are a million different ways to create a LISP language that conforms to the LISP axioms for behavior. But there aren’t currently a million LISP implementations to match these interpretations, because high-level semantics become more useful if there is one unique way to describe their meanings in operational terms.

Even though the operational semantics of LISP are not particularly easy for the novice to grasp, these same semantics give the expert a strong and universally shared semantic model that aids in performance tuning and in debugging the interpreter itself. If one person fixes a bug in this common model, everyone using the model benefits from the fix.

This is a hard fact for the typical system administrator to swallow. We pride ourselves on molding systems in our own images. We locate files where we can find them, and we structure documentation according to personal taste. This all comes with “being the gods of the machine,” as one system administrator put it. Our tools, molded in our images, support and enforce the view that customizing systems and molding them to our own understandings is a necessary part of management.

It is not.

There are, in my mind, roughly three levels of maturity for a system administrator:

■ Managing a host
■ Managing a network
■ Managing business process and lifecycle

As one matures, one gradually understands and adopts practices that take a broader view and bring increasingly long-term benefits. But even at the highest level of maturity in this model, one is not done. There remains another level of understanding and achievement:

■ Managing the profession

Managing the profession entails doing things as part of one’s practice that benefit all system administrators, and not just the administrators at one’s own site.

It would have been easy to allow LISP to “fragment” into many languages, at no cost to the individual programmer. There would have been, though, a cost to the profession had there been 100 LISPs: It would have limited sharing and stifled development.

But this is exactly the juncture where we sit with configuration management now. There are a million ways to assure behavior, and everyone has a different way. Our tools support and encourage this divergence. It is like having a million different LISPs with the same axiomatic semantics and different implementations, for no particularly good reason!

In other words, a semantic model is not enough to take system administration to the next level. That model must also be shared and common, and it must refer to and be implemented via shared base semantics.

To raise the level of modeling, it is necessary to do the following:

■ Avoid incidental complexity and incidental variation.
■ Seek shared standards.
■ Evolve a common semantic base from those standards.
■ Incorporate best practices in that base.

The end product of this process is a set of shared standards that form a common semantic base that tools can implement and support.

What does this mean to you? It is really simple: If something hurts the profession, stop doing it. One example of a hurtful practice is our arbitrarily differing ways of assuring behavior. We place our personal need to remember details over the professional need for standards and consistency.

■ There are millions of ideas for where packages and files should be located in a running system. Let’s all choose one and stick with it!
■ There are millions of ways to configure services, all of which accomplish the same thing. Let’s choose one of these.
■ Life is much simpler if, for example, we choose as a profession to run each service on an independent virtual server.
■ Let us endeavor to leave every system in a state any other professional can understand.

Let us use our tools not for divergence, but for convergence to a common standard for providing and maintaining services, one so strong in semantics that we can forget the underlying details and “close the boxes.” Let us support each other in protecting those standards against deviations that foster personal rather than professional objectives. If there is exactly one “best way” to provide a service, then we can all use that way, and the “institutional memory” of the profession as a whole becomes smaller and more manageable.

Will this ever happen? That is not a question of science, but one of sociology. Tool builders build their careers (and livelihoods) by encouraging adoption of “their personal views” on semantic intent. Meanwhile, the tools we have available for configuration management are still at the x=1 stage. One can throw abstraction at a problem (without semantics), and the intrinsic difficulty of the problem does not change. Only when we can define function based upon the abstraction, rather than upon its realization, can we move beyond abstraction to a workable semantics for configuration in which the internals of the configuration process become unimportant, as they rightly deserve to be.

This will be hard work, socially and technically, but the end product will be a profession whose common mission is to make all networks sing.

ERIC LANGHEINRICH

http:BL: Taking DNSBL Beyond SMTP

Eric Langheinrich is CTO and co-founder of Unspam Technologies, Inc., and an expert in the detection and identification of malicious network activity. Eric and his team at Unspam pioneered Project Honey Pot.

For many years, email recipients have benefited from the use of various DNS-based blacklists (DNSBLs) in the fight against spam. Through efficient DNS lookups, mail servers can check individual connecting clients against various blacklists. Major DNSBLs include Spamhaus, SORBS, SURBL, and MAPS. These DNSBLs give mail servers the ability to decide how to handle requests from client hosts based on individual blacklist criteria. Depending on the results of blacklist lookups, a mail server can block requests, allow them, or apply extra spam-filtering scrutiny to messages from those hosts.
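For readers who have not seen one, a DNSBL check is an ordinary DNS query: the client’s IPv4 octets are reversed and prepended to the blacklist’s zone, and whether the resulting name resolves tells the mail server whether the address is listed. A minimal Python sketch, with the zone left as a parameter; dnsbl.example.org used below is a placeholder, since each list documents its own zone and the meaning of its return codes.

```python
import ipaddress
import socket


def dnsbl_query_name(client_ip: str, zone: str) -> str:
    """Reverse the IPv4 octets and append the list's zone, so that
    192.0.2.99 checked against zone Z becomes 99.2.0.192.Z."""
    octets = str(ipaddress.IPv4Address(client_ip)).split(".")
    return ".".join(reversed(octets)) + "." + zone


def is_listed(client_ip: str, zone: str) -> bool:
    """A listed address resolves (conventionally to an address in
    127.0.0.0/8). Any resolution failure, including a temporary one,
    is treated here as "not listed"."""
    try:
        socket.gethostbyname(dnsbl_query_name(client_ip, zone))
    except socket.gaierror:
        return False
    return True
```

For example, `dnsbl_query_name("192.0.2.99", "dnsbl.example.org")` yields `99.2.0.192.dnsbl.example.org`; a mail server would call `is_listed` with a real list’s zone before deciding how to treat the connection.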

Mail servers, however, are not the only network resource that needs to be protected from malicious machines. Web servers face a constant assault from malicious Web robots that harvest email addresses, look for exploits, and post comment spam. At the most basic level, these malicious robots rob a site of significant bandwidth. More importantly, by allowing these robots to troll your site, you open yourself to the possibility of future attacks via spam or Web-based vulnerabilities.

The new http:BL service from Project Honey Pot (www.projecthoneypot.org) is similar to a traditional mail server DNSBL, but it is designed for Web traffic rather than mail traffic. The data provided through the service empowers Web site administrators, for the first time, to choose what traffic is allowed onto their sites. By stopping malicious robots before they can access a Web site, the http:BL service is designed to save bandwidth, reduce online threats, and decrease the volume of spam sent to the gateway by preventing spammers from getting email addresses in the first place.

Each day, thousands of robots, crawlers, and spiders troll the Web. Web site administrators have few resources available to tell whether a visitor to a site is good or malicious. Project Honey Pot was created as an open community to provide this information to Web site administrators, enabling them to make informed decisions on whom to allow onto their sites.

Project Honey Pot is a distributed network of decoy Web pages that Web site administrators can include on their sites to gather information about robots, crawlers, and spiders. The project collates data on harvesters, spammers, dictionary attackers, and comment spammers and makes this data available to its members to help them protect their Web sites and inboxes.

Web site administrators who want to contribute data to Project Honey Pot do so by installing a script on their site and adding hidden links to that honeypot script from their existing pages. The links are designed to be hidden from human visitors but followed by robots. When accessed, the honeypot script produces a Web page on which trap elements, including unique email addresses and Web forms, are hidden. If mail is sent to a trap address or data is posted to a trap form, the activity is recorded by Project Honey Pot and included in http:BL. The scripts are published as open source and are currently available for PHP, Perl, ASP, Ruby, ColdFusion, and SAP NetWeaver.
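The value of a per-visit unique address is that any spam later sent to it points back to the one visit that harvested it. The Python below is a hypothetical sketch of that idea, not Project Honey Pot’s actual script: the trap domain `trap.example.org`, the in-memory `issued` registry, and the function names are all assumptions made for illustration.

```python
import secrets
import time

# Hypothetical trap domain; a real deployment would use a domain whose
# mail is delivered to the honeypot operator.
TRAP_DOMAIN = "trap.example.org"

# In-memory registry: issued address -> (visitor IP, time of visit).
issued = {}

def mint_trap_address(visitor_ip: str) -> str:
    """Create a fresh random address and remember which visit it was shown to."""
    local_part = secrets.token_hex(8)  # 16 random hex characters
    address = f"{local_part}@{TRAP_DOMAIN}"
    issued[address] = (visitor_ip, time.time())
    return address

def trace_spam(recipient: str):
    """Return (ip, timestamp) of the visit that harvested recipient, or None."""
    return issued.get(recipient)

def render_trap_page(visitor_ip: str) -> str:
    """Build a page whose trap address is hidden from humans but present for robots."""
    address = mint_trap_address(visitor_ip)
    return (
        "<html><body>"
        f'<div style="display:none"><a href="mailto:{address}">{address}</a></div>'
        "</body></html>"
    )
```

When spam later arrives for one of these addresses, `trace_spam` recovers the IP address that was served the page, which is the kind of evidence that lets a harvester be identified.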

Currently, Project Honey Pot has tens of thousands of installed honeypots and members in over 114 countries spanning every continent but Antarctica. Members can also participate in the project by “donating” MX records from their domains to the project. Donated MXs extend the network, allowing Project Honey Pot to track spam servers and dictionary attackers. Donated domains allow Project Honey Pot to generate a virtually unlimited number of spam trap email addresses that are difficult to detect. Together, these resources help gather information on malicious Web robots.

The http:BL service makes this data available to any member of Project Honey Pot in an easy and efficient way. To use http:BL, a host simply performs a DNS lookup of a Web visitor’s IP address, in reverse-octet format, against one of the http:BL DNS zones. The http:BL DNS system then returns a value that indicates the status of the visitor. Visitors may be identified as search engines, suspicious, harvesters, comment spammers, or a combination thereof. The response to the DNS query indicates what type of visitor is accessing the Web site, the threat level of the visitor, and how long it has been since the visiting IP was last seen on the Project Honey Pot network.

Each user of http:BL must register with Project Honey Pot and request an Access Key to use the service. All Access Keys are 12 characters long and consist only of lowercase alphabetic characters (no numbers).
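Because the key format is fixed, a client can reject malformed keys before sending any queries. A minimal sketch in Python, assuming only the format rule stated above; it checks the shape of a key and cannot tell whether the key is actually registered.

```python
import re

# Exactly 12 lowercase ASCII letters, per the Access Key format above.
ACCESS_KEY_RE = re.compile(r"[a-z]{12}")

def is_valid_access_key(key: str) -> bool:
    """Return True if key has the documented Access Key format."""
    return ACCESS_KEY_RE.fullmatch(key) is not None
```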

Every query consists of your Access Key, followed by the IP address you are seeking information about (in reverse-octet format), followed by the List-Specific Domain you are querying. For example, if you are querying for information about the IP address 127.9.1.2 and your Access Key is abcdefghijkl, construct the query as follows:

abcdefghijkl.2.1.9.127.dnsbl.httpbl.org

Here abcdefghijkl is the Access Key, 2.1.9.127 is the octet-reversed IP, and dnsbl.httpbl.org is the List-Specific Domain.
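The query name is simple string assembly. A minimal Python sketch, assuming an IPv4 visitor address and the `dnsbl.httpbl.org` zone from the example; the function name is illustrative.

```python
import ipaddress

# List-Specific Domain used in the example above.
HTTPBL_ZONE = "dnsbl.httpbl.org"

def build_httpbl_query(access_key: str, visitor_ip: str) -> str:
    """Return '<key>.<octet-reversed IP>.<zone>' for an IPv4 visitor."""
    ip = ipaddress.IPv4Address(visitor_ip)  # raises ValueError for non-IPv4 input
    # Reverse the order of the four octets, not the characters of the string.
    reversed_octets = ".".join(reversed(str(ip).split(".")))
    return f"{access_key}.{reversed_octets}.{HTTPBL_ZONE}"
```

For instance, `build_httpbl_query("abcdefghijkl", "127.9.1.2")` yields the query shown above. The standard `ipaddress` module also offers `reverse_pointer`, which uses the same octet order for `in-addr.arpa` names.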

Two things are important to note about the IP address in the query. First, it is the address of the visitor to your Web site about which you are seeking information. Second, it must be in reverse-octet format: if the IP address 127.9.1.2 visits your Web site and you want to ask http:BL about it, you must first reverse it to 2.1.9.127.

Note that reversing the order of the octets (the numbers separated by periods) is not the same as reversing the entire IP address string. For example, if you were querying the IP address 10.98.76.54, the correct and incorrect reverse-octet formats would be:

Query: 10.98.76.54

Right: 54.76.98.10

Wrong: 45.67.89.01

TABLE 1: Visitor types encoded in the fourth octet

| Value | Meaning |
|---|---|
| 0 | Search Engine |
| 1 | Suspicious |
| 2 | Harvester |
| 4 | Comment Spammer |
| 8 | [Reserved for Future Use] |
| 16 | [Reserved for Future Use] |
| 32 | [Reserved for Future Use] |
| 64 | [Reserved for Future Use] |
| 128 | [Reserved for Future Use] |

Three scenarios exist for responses from the http:BL service: (1) not listed, (2) listed, and (3) known search engine. The majority of IP addresses do not appear in http:BL’s records; if the queried IP does not appear, http:BL returns a nonresult (NXDOMAIN). A query for a listed entry or a search engine receives a reply from the DNS server in IPv4 format, with three of the four octets containing information about the visitor. The intention is to give the Web site administrator flexibility in how to treat the visitor rather than a simple black-and-white response (e.g., the administrator may want to treat known harvesters differently from known comment spammers, blocking the former from seeing email addresses while blocking the latter from POSTing to forms).
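The three scenarios can be told apart with an ordinary A-record lookup. A minimal Python sketch, assuming the system resolver via `socket.gethostbyname`; the resolver is a parameter so the logic can be exercised without network access. Note the assumption flagged in the comments: the standard socket call raises the same kind of error for NXDOMAIN as for timeouts, so a production client might prefer a DNS library (such as dnspython) that reports NXDOMAIN distinctly.

```python
import socket
from typing import Callable, Optional

def resolve_a(name: str) -> Optional[str]:
    """Return an IPv4 answer for name, or None if the lookup fails."""
    try:
        return socket.gethostbyname(name)
    except (socket.gaierror, socket.herror):
        # NXDOMAIN ends up here, but so do timeouts and other resolver
        # errors; this sketch treats them all as "not listed".
        return None

def classify_response(query: str,
                      resolver: Callable[[str], Optional[str]] = resolve_a) -> str:
    """Map an http:BL lookup onto the three response scenarios."""
    answer = resolver(query)
    if answer is None:
        return "not listed"
    octets = [int(part) for part in answer.split(".")]
    if len(octets) != 4 or octets[0] != 127:
        raise ValueError(f"unexpected http:BL answer: {answer}")
    # A fourth octet of 0 marks a known search engine; anything else is listed.
    return "search engine" if octets[3] == 0 else "listed"
```

Passing a dictionary’s `get` method as the resolver (for example, one mapping the example query to `127.3.5.1`) is a convenient way to test the classification offline.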

Responses for listed entries take one of two predefined formats, depending on whether the entry is for a known search engine or for a malicious bot. The fourth octet represents the type of visitor. Defined types include “search engine,” “suspicious,” “harvester,” and “comment spammer.” Because a visitor may belong to multiple types (e.g., a harvester that is also a comment spammer), this octet is represented as a bitset with an aggregate value from 0 to 255. The different types are outlined in Table 1. This value is useful because it allows you to treat different types of robots in different ways.

Because the fourth octet is a bitset, it can represent visitors identified as falling into multiple categories. See Table 2 for an explanation of the currently possible values.
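Decoding the bitset amounts to testing each defined bit. A minimal Python sketch using the bit values from the type tables; the constant and function names are illustrative, and reserved bits (8 and above) are simply ignored here, which is one possible choice among several.

```python
from typing import List

# Bit values from the visitor-type table; 8 through 128 are reserved.
SUSPICIOUS = 1
HARVESTER = 2
COMMENT_SPAMMER = 4

_TYPE_BITS = [
    (SUSPICIOUS, "Suspicious"),
    (HARVESTER, "Harvester"),
    (COMMENT_SPAMMER, "Comment Spammer"),
]

def decode_visitor_type(value: int) -> List[str]:
    """Expand the fourth-octet bitset into the category names it contains."""
    if not 0 <= value <= 255:
        raise ValueError("the fourth octet must be between 0 and 255")
    if value == 0:
        return ["Search Engine"]  # zero is a value of its own, not a bit
    # Reserved bits are ignored rather than rejected.
    return [name for bit, name in _TYPE_BITS if value & bit]
```

A value of 5, for example, decodes to suspicious plus comment spammer (1+4), matching the combined entries in the table of possible values.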

IPs are labeled “suspicious” if they engage in behavior consistent with a malicious robot but have not yet been observed behaving maliciously. For example, on average it takes a harvester nearly a week from when it finds an email address to when it sends the first spam message to that address. In the meantime, the as-yet-unidentified harvester’s IP address may be seen hitting a number of honeypots, ignoring rules such as those set forth in robots.txt, and otherwise behaving suspiciously. In this case, the IP may be listed as suspicious.

The third octet represents a threat score for the queried IP. Project Honey Pot assigns this score internally based on a number of factors, such as the number of honeypots the IP has been seen visiting and the damage done during those visits (email addresses harvested or forms posted to). The score ranges from 0 to 255, where 255 is extremely threatening and 0 indicates that no threat score has been assigned.

Project Honey Pot assigns threat scores to IP addresses observed on the Project Honey Pot network as part of the http:BL service. Threat scores are only a rough guide to the threat that a particular IP address may pose and should be treated as such. Although threat scores range from 0 to 255, they follow a logarithmic scale, which makes it extremely unlikely that a threat score over 200 will ever be returned.

Threat scores are calculated slightly differently for different types of threats. For example, a threat score of 25 for a harvester is not necessarily as threatening as a threat score of 25 for a comment spammer. A harvester’s threat score is determined by its reach (the number of honeypots it has hit), its damage (the number of email messages that have resulted from its harvests), its activity (the frequency of its visits over a period of time), and other factors.

The second octet represents the number of days since the IP’s last activity was observed on the Project Honey Pot network. This value ranges from 0 to 255 days. It helps you assess how stale the information provided by http:BL is and, therefore, the extent to which you should rely on it.
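The age, threat score, and type octets can be combined into a site policy, such as hiding email addresses from harvesters and rejecting form posts from comment spammers. A minimal Python sketch; the `Listing` class, the action names, and the cut-offs `MAX_AGE_DAYS = 30` and `MIN_THREAT_SCORE = 25` are hypothetical choices for illustration, not values recommended by Project Honey Pot.

```python
from dataclasses import dataclass
from typing import Set

HARVESTER = 2
COMMENT_SPAMMER = 4

# Hypothetical site policy: illustrative cut-offs, not recommended values.
MAX_AGE_DAYS = 30
MIN_THREAT_SCORE = 25

@dataclass
class Listing:
    days_since_seen: int  # second octet
    threat_score: int     # third octet
    visitor_type: int     # fourth octet (bitset)

def restrictions_for(listing: Listing) -> Set[str]:
    """Choose restrictions for a listed visitor, ignoring stale or low-threat data."""
    if listing.visitor_type == 0:
        # Search engine: the third octet is a serial number and the second
        # is reserved, so neither is an age or a threat score.
        return set()
    if listing.days_since_seen > MAX_AGE_DAYS or listing.threat_score < MIN_THREAT_SCORE:
        return set()
    restrictions = set()
    if listing.visitor_type & HARVESTER:
        restrictions.add("hide_email_addresses")
    if listing.visitor_type & COMMENT_SPAMMER:
        restrictions.add("reject_form_posts")
    return restrictions
```

A single threshold is a simplification: since scores are calculated differently for different threat types, a stricter policy might keep a separate threshold per type.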

The first octet is always 127 and carries no meaning specific to the particular visitor.

The following hypothetical query and response will be referenced throughout the rest of this section:

Query: abcdefghijkl.2.1.9.127.dnsbl.httpbl.org

Response: 127.3.5.1

The response means the visitor has exhibited suspicious behavior on the Project Honey Pot network (fourth octet: 1), has a threat score of 5 (third octet), and was last seen by the project’s network 3 days ago (second octet).
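Splitting a listed answer into its fields is a direct transcription of the octet layout. A minimal Python sketch; the `HttpBLListing` and `parse_listing` names are illustrative, and the sketch assumes the answer is for a listed visitor rather than a search engine.

```python
from typing import NamedTuple

class HttpBLListing(NamedTuple):
    days_since_last_seen: int  # second octet
    threat_score: int          # third octet
    visitor_type: int          # fourth octet (bitset)

def parse_listing(answer: str) -> HttpBLListing:
    """Split a listed http:BL answer such as '127.3.5.1' into named fields."""
    parts = [int(p) for p in answer.split(".")]
    if len(parts) != 4 or parts[0] != 127:
        raise ValueError(f"not an http:BL answer: {answer}")
    _, days, threat, vtype = parts  # first octet is always 127
    return HttpBLListing(days, threat, vtype)
```

Applied to the example response, `parse_listing("127.3.5.1")` gives 3 days, a threat score of 5, and visitor type 1 (suspicious).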

Search engines represent a special case. Known search engines always return a value of zero in the last octet. A visitor cannot be listed as both a search engine and some kind of malicious bot: search engines found to be harvesting or comment spamming cease to be listed as search engines.

In the case of a known search engine, indicated by a fourth octet of 0, the third octet becomes a serial number identifying the specific search engine. The second octet is reserved for future use.

TABLE 2: Current possible values of the fourth octet

| Value | Meaning |
|---|---|
| 0 | Search Engine (0) |
| 1 | Suspicious (1) |
| 2 | Harvester (2) |
| 3 | Suspicious & Harvester (1+2) |
| 4 | Comment Spammer (4) |
| 5 | Suspicious & Comment Spammer (1+4) |
| 6 | Harvester & Comment Spammer (2+4) |
| 7 | Suspicious & Harvester & Comment Spammer (1+2+4) |
| >7 | [Reserved for Future Use] |
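For search-engine answers, the third octet is the field to read. A minimal Python sketch; which engine each serial number denotes is defined by Project Honey Pot and is not listed here, so the sketch returns the raw number, and the answer `127.0.9.0` used in the test is made up.

```python
from typing import Optional

def search_engine_serial(answer: str) -> Optional[int]:
    """Return the search-engine serial number from an http:BL answer.

    Returns None when the fourth octet is nonzero, i.e., the visitor is a
    listed (suspicious or malicious) robot rather than a known search engine.
    """
    octets = [int(p) for p in answer.split(".")]
    if len(octets) != 4 or octets[0] != 127:
        raise ValueError(f"not an http:BL answer: {answer}")
    if octets[3] != 0:
        return None
    # For search engines the second octet is reserved, so it is not examined.
    return octets[2]
```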

With the launch of the http:BL service, Project Honey Pot released the

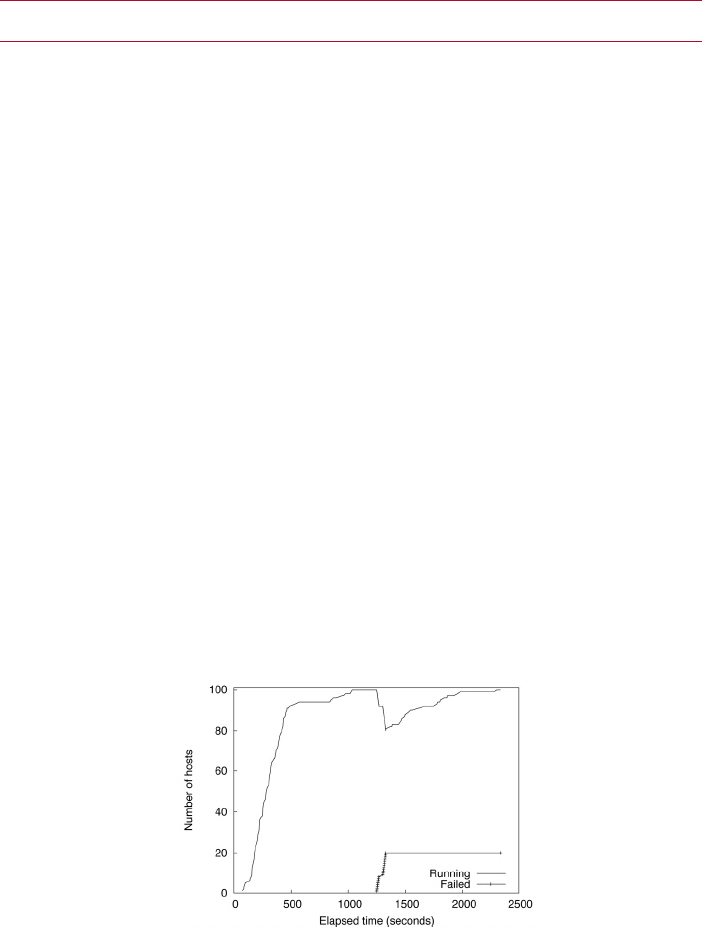

module mod_httpbl Apache. The mod_httpbl module provides an efficient